Imagine that you want to know the peak category of every hurricane in the 1800s. If no dataset already exists, you would likely need to search through numerous old newspaper clippings to build one yourself. Today, many researchers find themselves in a similar position, constructing datasets by hand from non-standard data. Doing so is incredibly tedious and can require sifting through countless disorganised web pages. This laborious process likely discourages researchers and prevents many potentially impactful papers from ever coming to fruition.

Fortunately, the advent of large language models (LLMs) offers a new way around this problem. Researchers have already identified 25 distinct use cases for these models in the academic process (Korinek 2023). In this column, I suggest one more: dataset construction. Large language models are trained on vast amounts of data, which means they have parsed many information-rich documents. In some cases, we can quickly and accurately extract that information back out.

Examples

To explore the potential of large language models, I used GPT-3.5 and GPT-4, the state-of-the-art models behind ChatGPT, as well as ChatGPT itself. I applied the models to three distinct examples. In general, I found that this process excels at locating well-documented data scattered online, such as government information, but may struggle with niche or less-documented data, such as specific local weather events.

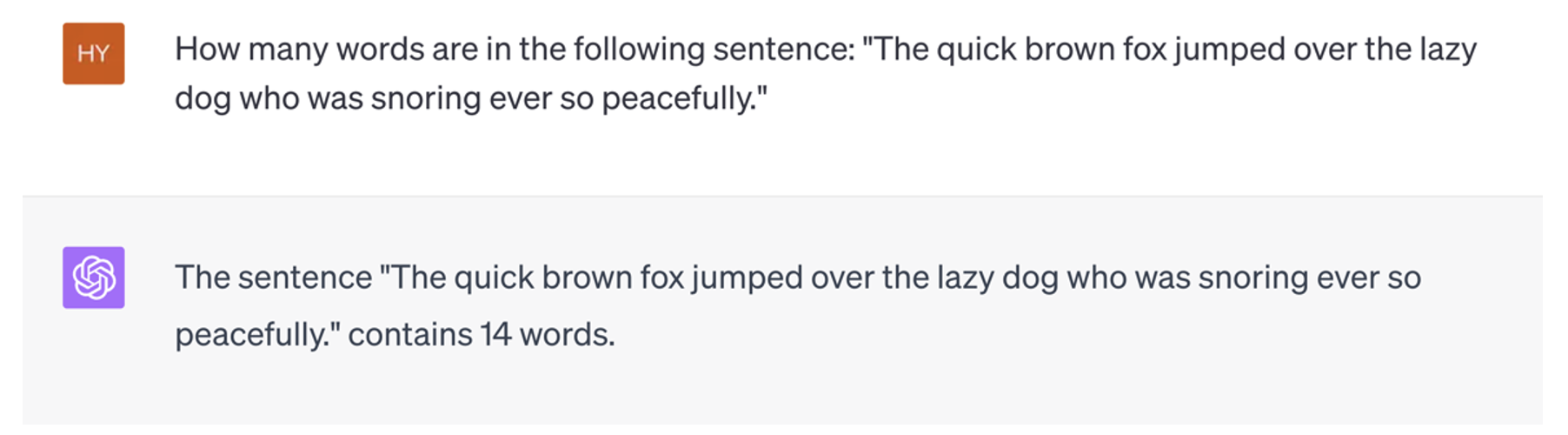

Figure 1 ChatGPT failing to count the words in a sentence

The limitations of GPT-3.5 and GPT-4 extend to computation based on online data: for example, they may be able to locate a calendar of rainy days in a year but cannot reliably count them. This weakness at arithmetic is easy to see by asking any GPT model to count the words in a sentence, a task it regularly fails (Figure 1).
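One practical workaround is to let the model locate the information and do the counting yourself in code. The sketch below assumes you have prompted the model to return one item (for example, one date) per line and nothing else; the function name and the sample response are hypothetical.

```python
def count_listed_items(model_output: str) -> int:
    """Count non-empty lines in a reply that lists one item per line.

    The counting happens in Python, not in the model, so it is exact.
    """
    return sum(1 for line in model_output.splitlines() if line.strip())


# Hypothetical model reply listing rainy days, one per line.
response = "2023-01-03\n2023-01-09\n\n2023-02-14\n"
print(count_listed_items(response))  # 3

# The same idea applies to the word-counting failure in Figure 1.
print(len("Large language models struggle to count words reliably.".split()))  # 8
```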

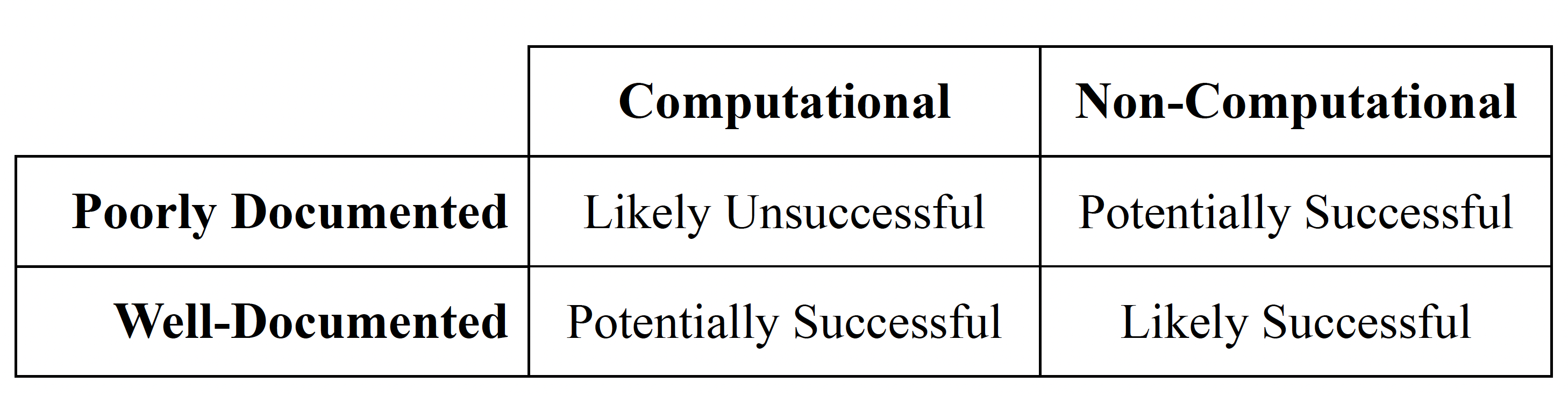

Table 1 Summary table for when GPT may succeed

Notes: Additionally, whenever you can tolerate incomplete data, GPT is more likely to be a successful tool.

Despite these limitations, the models become more useful when incomplete answers are acceptable. You can craft prompts that ask GPT to indicate when it is uncertain and then drop those responses, which is reasonable as long as the model's uncertainty isn't correlated with your variables of interest. Flagging uncertainty isn't foolproof, so validation remains crucial. Nonetheless, prompting for uncertainty can help you build a dataset of confident, accurate responses.
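A simple first check on whether uncertainty is correlated with a variable of interest is to compare how often the model answers 'unsure' across groups before dropping those answers. This is a minimal sketch, assuming replies have already been parsed into 'yes', 'no' or 'unsure' labels; the grouping variable, function name and data are hypothetical, and a formal test would be more rigorous.

```python
from collections import defaultdict


def unsure_share_by_group(records):
    """Return the share of 'unsure' replies within each group.

    `records` is an iterable of (group, label) pairs with labels 'yes',
    'no' or 'unsure'. Large gaps between groups suggest that dropping
    uncertain answers could bias comparisons between those groups.
    """
    totals = defaultdict(int)
    unsure = defaultdict(int)
    for group, label in records:
        totals[group] += 1
        if label == "unsure":
            unsure[group] += 1
    return {group: unsure[group] / totals[group] for group in totals}


# Hypothetical classifications, grouped by a variable of interest.
data = [
    ("treated", "yes"), ("treated", "unsure"), ("treated", "no"), ("treated", "yes"),
    ("control", "no"), ("control", "no"), ("control", "unsure"), ("control", "unsure"),
]
print(unsure_share_by_group(data))  # {'treated': 0.25, 'control': 0.5}
```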

Example 1: Federal Deposit Insurance Corporation failed bank dataset

The Federal Deposit Insurance Corporation (FDIC) failed bank dataset records every official bank failure, including the bank’s location, its closing date, and the acquiring institution.

I randomly selected 20 banks from the dataset and queried the web-browsing version of GPT-4 (that is, the version that can itself browse the web). Simply by inserting each bank’s name into the prompt “When did [insert bank name] fail and who acquired it?”, I recovered the closing date and acquirer for all 20 banks.

Additionally, the web-browsing version of GPT-4 cites a source for its output. For many of the answers, GPT-4 drew on the FDIC’s official web page for that specific closure; in some instances, the model pulled directly from the publicly available database on the FDIC’s website. Of course, this example is somewhat trivial because the data are so easily accessible, but it does demonstrate a straightforward first application of the method.
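To repeat and score an exercise like this, one option is to fill the prompt template for each sampled bank and compare the answers you transcribe from GPT-4 with the official FDIC records. This is a minimal sketch, assuming both have already been parsed into (closing date, acquirer) pairs; the function names, the exact-match rule and the bank names are hypothetical placeholders, not real FDIC data.

```python
from datetime import date

PROMPT_TEMPLATE = "When did {bank} fail and who acquired it?"


def build_prompts(bank_names):
    """Insert each bank name into the question used in this example."""
    return {name: PROMPT_TEMPLATE.format(bank=name) for name in bank_names}


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so formatting quirks aren't errors."""
    return " ".join(text.lower().split())


def check_answers(answers, reference):
    """Return the banks whose (closing date, acquirer) answer is missing or wrong.

    Both arguments map bank name -> (datetime.date, acquirer name).
    """
    mismatches = []
    for bank, (ref_date, ref_acquirer) in reference.items():
        answer = answers.get(bank)
        if answer is None:
            mismatches.append(bank)
            continue
        got_date, got_acquirer = answer
        if got_date != ref_date or normalise(got_acquirer) != normalise(ref_acquirer):
            mismatches.append(bank)
    return mismatches


# Hypothetical banks and records, for illustration only.
reference = {
    "First Example Bank": (date(2010, 4, 16), "Acme National Bank"),
    "Sample Savings Bank": (date(2011, 7, 8), "Placeholder Trust"),
}
answers = {
    "First Example Bank": (date(2010, 4, 16), "acme national  bank"),
    "Sample Savings Bank": (date(2011, 7, 1), "Placeholder Trust"),
}
print(build_prompts(["First Example Bank"]))
print(check_answers(answers, reference))  # ['Sample Savings Bank']
```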

Example 2: Determining industry type

The previous example reconstructed a publicly available dataset, but does this approach hold up when no such dataset exists?

For a recent paper analysing the impact of layoffs on crime, I used a national layoff dataset. However, my empirical approach required considering only manufacturing-oriented companies, in an effort to reduce the likelihood of including companies that are strongly affected by local crime, as hotels might be. Unfortunately, the layoff dataset did not report the industry for most entries, so I turned to ChatGPT.

I used GPT-3.5-Turbo (equivalent to ChatGPT) with the following prompt:

“Is [business name] in [town of business], [state of business] a manufacturing company? If it manufactures at all or manufactured in the past, only say 'yes'. If it does not manufacture, only say 'no'. If you have insufficient information, only say 'unsure'. Don't add any other context.”

This prompt produced clean, predictable outputs from the OpenAI API, which I could then sort using simple Python string functions and if-statements.
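Sorting of this kind might look like the sketch below, assuming the replies arrive as plain strings. The function name, and the conservative choice to treat any reply that is not a bare 'yes' or 'no' as 'unsure', are illustrative assumptions rather than the exact code used for the paper.

```python
def classify_response(text: str) -> str:
    """Map a raw model reply to 'yes', 'no' or 'unsure'.

    The prompt asks for a single word, but replies may still carry stray
    whitespace, punctuation, quotes or capitals, so strip those first.
    Anything that is not then exactly 'yes' or 'no' counts as 'unsure'.
    """
    cleaned = text.strip().strip(".!'\"").strip().lower()
    if cleaned == "yes":
        return "yes"
    if cleaned == "no":
        return "no"
    return "unsure"


replies = ["Yes", "no.", "Unsure", "Yes, it makes furniture."]
print([classify_response(r) for r in replies])  # ['yes', 'no', 'unsure', 'unsure']
```

Treating wordy replies as 'unsure' sacrifices some coverage, but it keeps the confident labels clean, which is the property the analysis depends on.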

An important feature of my prompt is that the model can say it is “unsure.” This helped guard against hallucinations, which are confident but inaccurate answers from the model. Because I was running county-level difference-in-differences regressions, I simply removed from the control group (counties with no manufacturing closures) any county that had an unclassified closure. This ensured that I was comparing counties that had manufacturing closures with counties that had no manufacturing closures at all.
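The rule for building the comparison groups can be written as a short function. This is a hypothetical sketch, assuming each closure has already been labelled 'yes', 'no' or 'unsure'; the function name and county names are placeholders, and counties with no recorded closures are outside its scope.

```python
def split_counties(closures):
    """Assign counties to treated, control or dropped from labelled closures.

    `closures` is an iterable of (county, label) pairs with labels 'yes',
    'no' or 'unsure'. Only counties that appear in `closures` are handled.
    - treated: at least one manufacturing closure ('yes')
    - control: every closure confidently classified 'no'
    - dropped: no 'yes' but at least one 'unsure', because an
      unclassified closure might have been a manufacturing one
    """
    labels_by_county = {}
    for county, label in closures:
        labels_by_county.setdefault(county, set()).add(label)

    treated, control, dropped = set(), set(), set()
    for county, labels in labels_by_county.items():
        if "yes" in labels:
            treated.add(county)
        elif "unsure" in labels:
            dropped.add(county)
        else:
            control.add(county)
    return treated, control, dropped


closures = [("A", "yes"), ("A", "unsure"), ("B", "no"), ("B", "no"),
            ("C", "no"), ("C", "unsure")]
treated, control, dropped = split_counties(closures)
print(sorted(treated), sorted(control), sorted(dropped))  # ['A'] ['B'] ['C']
```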

In a random sample of 30 of these outputs, ChatGPT was 100% accurate whenever it was confident (a ‘yes’ or ‘no’ response). In a second random sample of 20 outputs, ChatGPT was again 100% accurate.

Example 3: Mayoral political affiliation in 2015

For this example, I took a random sample from a list of US cities and asked GPT models to name each city’s mayor in March 2015 and that mayor’s political affiliation.

This may seem like a simple task, but it is a very useful test of a model’s ability to construct datasets. The mayor of a town is public knowledge that should be well recorded. However, the record online can be unclear around a transition of power, so ChatGPT must do more than simply regurgitate information.

Furthermore, to test ChatGPT’s skill at retrieving harder-to-find information, you can examine how its performance changes as the population of the towns it analyses decreases. Towns with smaller populations are likely to have less information online, making it more difficult for ChatGPT to know the answer. Finally, to the best of my knowledge and after searching, there is no online database of all US mayors and their political affiliations, so this test truly measures what GPT models know from scattered, non-standard data.

GPT-3.5

For this column, I restricted a database of 30,844 US cities to those with populations of 100,000 or more. From these, I drew a random sample of 20 cities and asked ChatGPT (the GPT-3.5 version) to name each city’s mayor in March 2015 and that mayor’s political affiliation.
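Drawing such a sample is easy to make reproducible. This is a minimal sketch, assuming the city database has been loaded as (name, state, population) tuples; the function name, the seed and the synthetic cities are arbitrary illustrative choices, not those used for this column.

```python
import random


def sample_large_cities(cities, min_population=100_000, k=20, seed=2015):
    """Return a reproducible random sample of k cities at or above a cutoff.

    `cities` is a list of (name, state, population) tuples. A fixed seed
    lets someone else redraw exactly the same sample. Raises ValueError
    if fewer than k cities meet the cutoff.
    """
    eligible = [city for city in cities if city[2] >= min_population]
    rng = random.Random(seed)
    return rng.sample(eligible, k)


# Synthetic stand-in for the city database.
cities = [(f"City {i}", "XX", 10_000 * i) for i in range(1, 51)]
sample = sample_large_cities(cities, k=5)
print(len(sample), all(pop >= 100_000 for _, _, pop in sample))  # 5 True
```

Repeating the exercise with the looser cutoff only requires passing `min_population=40_000`.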

Ultimately, ChatGPT correctly identified the names and political affiliations of 18 out of 20 mayors. Its first error came when it named a mayor while stating in the same response that the municipality doesn’t actually have a mayor (which is true); a revised prompt might have prevented this. Its second error arose because 2015 was an election year in that city: ChatGPT named the mayor who held office later in the year rather than in March. Better planning of the data construction could have avoided both errors. I could have first asked which localities have a mayor at all, and I could have identified the cities that held elections in 2015 and either removed them or handled them manually.

I also repeated the task with a looser population constraint, using a random sample of cities with more than 40,000 residents. ChatGPT performed worse here, correctly identifying only 16 of the 20 mayors. However, this exercise also offered a clear example of how ChatGPT can surpass humans in efficiency. It took ChatGPT less than a minute to produce any one answer (often just a few seconds). In one case, it almost instantly named the correct mayor of Florence, Alabama, and his political affiliation, whereas it took me nearly ten minutes of searching to verify its claim.

GPT-4

I repeated the process for the 40,000-resident cutoff after switching to the GPT-4 version of ChatGPT, and there was a clear improvement. Although GPT-4 was unsure in many more cases, it was perfectly accurate whenever it was confident. GPT-4 may therefore be better suited to projects that can tolerate incompleteness but require very high accuracy.
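When comparing models in this way, it helps to report two numbers separately: how often the model gives a confident answer (coverage) and how accurate those confident answers are. This is a minimal sketch, assuming each output has been validated by hand and 'unsure' replies are recorded as None; the function name and sample values are hypothetical.

```python
def coverage_and_accuracy(results):
    """Summarise hand-validated outputs.

    `results` is a list of (model_answer, correct_answer) pairs, with
    model_answer set to None when the model said it was unsure.
    Returns (coverage, accuracy_when_confident); accuracy is None if the
    model was never confident.
    """
    confident = [(got, truth) for got, truth in results if got is not None]
    coverage = len(confident) / len(results) if results else 0.0
    if not confident:
        return coverage, None
    correct = sum(1 for got, truth in confident if got == truth)
    return coverage, correct / len(confident)


results = [("Democrat", "Democrat"), (None, "Republican"),
           ("Republican", "Republican"), (None, "Democrat")]
print(coverage_and_accuracy(results))  # (0.5, 1.0)
```

A model with lower coverage but higher accuracy when confident, as GPT-4 showed here, is the better choice whenever dropping uncertain cases is acceptable.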

Method

Applying this approach to your own research is relatively easy if you have a well-suited project. The steps are as follows:

- Find a list of objects for which you need information.

- Identify the information required.

- Construct a prompt to request the information.

- Write a few lines of code using the OpenAI API to feed the prompt to a GPT model of your choice, looping over all your objects.

- Manually validate randomly selected outputs.

- If accuracy falls short of the level you need, alter the variable of interest or revise the prompt, and try again.
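The coding and validation steps above might be sketched as follows. It assumes the official `openai` Python package (version 1 or later), where chat requests go through `client.chat.completions.create`, and an `OPENAI_API_KEY` environment variable. The model name, `temperature=0`, the abbreviated prompt, the company names and the helper names are illustrative choices, and a real run would also need error handling and retries for rate limits.

```python
import random
from typing import Callable, Iterable


def make_openai_ask(model: str = "gpt-3.5-turbo") -> Callable[[str], str]:
    """Return a function that sends one prompt to the OpenAI chat API.

    The import is deferred so the rest of this sketch runs without the
    `openai` package installed.
    """
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def ask(prompt: str) -> str:
        response = client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
            temperature=0,  # favour consistent, repeatable answers
        )
        return response.choices[0].message.content or ""

    return ask


def build_dataset(objects: Iterable[str], template: str,
                  ask: Callable[[str], str]) -> dict:
    """Fill the prompt template for each object and collect the replies."""
    return {obj: ask(template.format(obj=obj)) for obj in objects}


def validation_sample(dataset: dict, k: int, seed: int = 0) -> list:
    """Draw up to k (object, reply) pairs at random for manual checking."""
    rng = random.Random(seed)
    return rng.sample(sorted(dataset.items()), min(k, len(dataset)))


if __name__ == "__main__":
    def fake_ask(prompt: str) -> str:
        """Offline stand-in; swap in make_openai_ask() to query the API."""
        return "unsure"

    companies = ["Example Widgets Inc.", "Sample Hotel Group", "Placeholder Mills"]
    template = "Is {obj} a manufacturing company? Only say 'yes', 'no' or 'unsure'."
    data = build_dataset(companies, template, ask=fake_ask)
    print(validation_sample(data, k=2))
```

Passing the `ask` function in as an argument lets you test the whole loop offline before spending API credits.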

Conclusion

This method of dataset construction is new and largely untested. The size and complexity of these models make a full analysis of their capabilities impossible, but these examples are exciting, and I encourage you to explore these tools yourself. Given the significant benefits they may bring to your research, this opportunity is well worth considering.

References

Korinek, A (2023), “Language models and cognitive automation for economic research”, NBER Working Paper 30957.