Creativity is important, but under-studied in economics. Millions of people in the US alone work in jobs where creativity is essential, for example in research, engineering, professional services, media, and the arts (BLS 2010). These industries are engines of innovation and growth in developed economies (Florida 2002). CEO surveys also show that executives regularly list the creativity of their workers and their ability to innovate as top concerns (Mitchell et al. 2012, 2013, 2014). And companies such as IDEO have popularised the concept of 'design thinking' in business and education (Brown 2008).

In many of these industries, creativity often occurs in a competitive environment: firms pitch against each other for new clients and contracts, workers compete for promotions or praise, and entrepreneurs vie for funding. Given the amount of tournament-like competition in these settings, it is important to understand how competition affects the output of creative workers.

Logo design competitions: Creativity in action

In recent work (Gross 2019), I have studied the effects of competition on creative output in the context of logo design competitions, which feature creative professionals competing for substantive stakes. These competitions have become common in professional graphic design. They resemble a standard request for proposals (RFP): a client issues a request for submissions and awards a prize (or contract) to the entry it considers to be best.

In these contests, a client identifies itself, posts a project brief describing (loosely) what it is looking for, fixes a prize and a deadline, and then opens the contest to competition by freelance designers. While a contest is running, designers can submit as many designs as they want, and at any time they want. During the process, the client can give a star rating of between one and five to these submissions. Participants see ratings on their own submissions and the distribution of ratings at large.

These contests typically run for seven to ten days, offer prizes of around $200 to $250, and attract around 35 participants and 95 submissions. Ratings are also highly informative of a given submission’s chances of winning. Roughly two-thirds of designs get rated, but only around 3% receive the top rating. Most importantly, the data include the designs themselves, enabling me to study the choices made with each submission.

The theoretical view

These competitions differ from the canonical model of tournaments in that the designers not only have to decide how many submissions to enter into a contest, but also what to create with each one. This is where they use creative decision-making. We can see designers in these competitions sometimes iterating on a single idea, and sometimes branching out in new directions.

To structure these observations, I first introduce a simple theoretical framework in which agents compete for a winner-take-all prize by submitting designs which can either be an incremental modification of their earlier work ('tweak') or radically different ('original' or 'experiment'). In the model, this is a choice over risk: the agent can either select the safe tweak, or the risky experiment.

I then examine how a given agent’s incentives for each choice vary as competition intensifies. In this context, the model suggests that increasing competition will make the risky, original route more attractive than tweaking, up to a point. When competition becomes too severe, the returns to effort of either type become smaller, and agents will stop submitting designs altogether.

Empirical findings

To measure creativity, I use image comparison algorithms similar to those used by commercial content-based image retrieval software (such as Google Image Search) to calculate similarity scores between pairs of images. The algorithms make pairwise comparisons of structural features and return similarity scores between 0 (no match) and 1 (a perfect match).
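As a rough illustration of how a hash-based similarity score of this kind can be computed, the sketch below implements a simplified difference hash. It is not the implementation used in the paper. It assumes each image has already been converted to a small greyscale grid (a real pipeline would resize and desaturate the image first), and the names `dhash_bits` and `similarity` are hypothetical.

```python
from typing import List, Sequence

Grid = Sequence[Sequence[float]]  # greyscale pixel values, one inner sequence per row


def dhash_bits(gray: Grid) -> List[int]:
    """Simplified difference hash: 1 where a pixel is brighter than its right-hand neighbour."""
    bits: List[int] = []
    for row in gray:
        for left, right in zip(row, row[1:]):
            bits.append(1 if left > right else 0)
    return bits


def similarity(bits_a: Sequence[int], bits_b: Sequence[int]) -> float:
    """Share of matching hash bits: 0 means no bits match, 1 means identical hashes."""
    if len(bits_a) != len(bits_b):
        raise ValueError("hashes must have the same length")
    mismatches = sum(a != b for a, b in zip(bits_a, bits_b))
    return 1.0 - mismatches / len(bits_a)


# Toy 3x4 greyscale grids (illustrative values only, not real designs).
img_a = [[10, 20, 30, 40], [40, 30, 20, 10], [10, 10, 50, 50]]
img_b = [[10, 20, 30, 40], [40, 30, 20, 10], [50, 10, 50, 10]]

score = similarity(dhash_bits(img_a), dhash_bits(img_b))
print(round(score, 3))  # 7 of 9 bits match
```

Because the hash records only the direction of brightness changes between neighbouring pixels, two designs that share the same layout but differ in small details still receive a high score.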

Figure 1 shows three designs entered into a contest by the same designer, in order.[1]

- Designs (1) and (2) have some features in common, but also some fundamental differences, and my primary algorithm gives them a similarity score of 0.3 (my secondary algorithm score was 0.5).

- Designs (2) and (3) have far more in common, and this pair receives a similarity score of 0.7 (secondary algorithm, 0.9).

Using these measures, I can compute the maximal similarity of each design to any prior submissions by the same designer, as well as to those by competitors. When this maximal similarity is low, a design is substantively original.
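The maximal-similarity measure can be sketched as follows, assuming each design has already been reduced to a fixed-length bit hash and that a designer's entries are listed in submission order. The function names and the toy hashes are hypothetical and serve only to show the calculation.

```python
from typing import Callable, List, Optional, Sequence

Bits = Sequence[int]


def bit_similarity(a: Bits, b: Bits) -> float:
    """Share of matching bits between two equal-length hashes."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def max_similarity_to_prior(
    hashes: Sequence[Bits],
    sim: Callable[[Bits, Bits], float] = bit_similarity,
) -> List[Optional[float]]:
    """For each entry, the highest similarity to any earlier entry in the list.

    The first entry has no prior work to compare against, so its value is None.
    """
    result: List[Optional[float]] = []
    for i, current in enumerate(hashes):
        prior = hashes[:i]
        result.append(max((sim(current, p) for p in prior), default=None))
    return result


# Three toy hashes from one designer, in submission order (illustrative only).
entries = [(0, 0, 0, 0), (1, 1, 0, 0), (1, 1, 0, 1)]
print(max_similarity_to_prior(entries))  # [None, 0.5, 0.75]
```

The same function applies to the comparison with competitors' work if `prior` is instead drawn from the other designers' earlier entries.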

Figure 1 Illustration of image comparison algorithms

Notes: Figure shows three designs entered in order by a single designer in a single contest. The primary (perceptual hash) algorithm awarded a similarity score of 0.313 for designs (1) and (2) and a score of 0.711 for (2) and (3). For the secondary (difference hash) algorithm, scores were 0.508 for (1) and (2), and 0.891 for (2) and (3).

Client ratings then provide information (both to participants and to me, as the researcher) on individual performance, and the quality of competition at any point. The analysis compares submissions from a given participant with a high versus low rating, facing high- versus low-rated competition. This empirical strategy exploits naturally occurring, quasi-random variation in the timing and visibility of clients’ ratings. This allows me to compare designers’ responses to information they observe at the time of design against that which is absent or not yet available.

I find that, absent competition, top ratings cause participants to greatly reduce the production of original ideas. This effect is strongest when a designer receives a first five-star rating – the next entry will typically be a near replica of the highly rated work. Top-rated competition, however, reverses these effects by half or more. The magnitude of this effect is large enough to be visible to the naked eye: it is like going from the similarity of designs (2) and (3) in Figure 1, to that of designs (1) and (2). The effect is econometrically identified, and robust to alternative measures of the key variables.

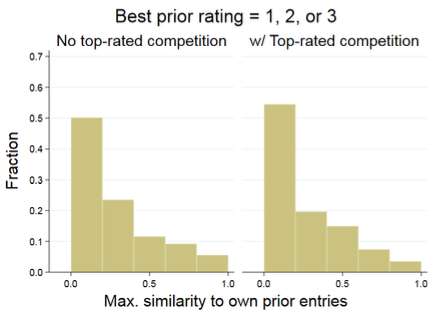

These patterns can be seen directly in the raw data. Figure 2 shows the distribution of designs’ maximal similarity to prior work by the same author in the same contest, conditional on the ratings the designer receives, and the presence of top-rated competition. Designers with a five-star rating are significantly more likely to tweak than those with lower ratings, but those who face five-star competition enter significantly more original work than they otherwise would.

Figure 2 Similarity to prior submissions, conditional on ratings and competition

Notes: Figure shows distribution of designs’ similarity to prior entries, conditional on the designer’s highest rating and the presence of top-rated competition. Each observation is a design, and the plotted variable is that design’s maximal similarity to any previous submission by the same designer in the same contest, taking values in [0,1], where a value of 1 indicates the design is identical to one of the designer’s earlier submissions.

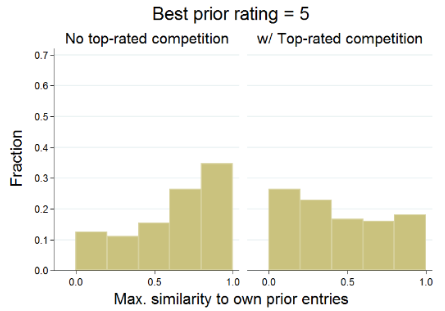

But this is not the whole story – these results are by construction conditioned on making a submission. Designers also have the option to stop submitting entirely. To account for this possibility, I discretise outcomes and model the likelihood that a designer enters a tweak, enters an original design, or stops participating, estimated as a function of that designer's contemporaneous probability of winning. Figure 3 illustrates the results.

- When competition is weak, a designer will tend to enter tweaks.

- As it strengthens, the designer will be more likely to enter original work.

- When competition is severe, designers most often stop submitting designs.

The tendency to produce original work is greatest when facing roughly 50-50 odds of winning – in other words, with one comparable competitor.
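The discretisation step can be illustrated with a minimal sketch. It assumes a hypothetical similarity cutoff of 0.7 for calling a design a tweak and uses made-up records, and it reports raw shares by win-probability bin rather than the estimated model described in the paper.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

TWEAK_THRESHOLD = 0.7  # hypothetical cutoff, chosen only for illustration

# (win probability, maximal similarity to own prior work, enters more designs afterwards)
Record = Tuple[float, float, bool]


def classify(max_similarity: float, enters_more: bool,
             threshold: float = TWEAK_THRESHOLD) -> str:
    """Assign one of three discrete outcomes to a submission."""
    if not enters_more:
        return "abandon"
    return "tweak" if max_similarity >= threshold else "original"


def outcome_shares(records: Iterable[Record], n_bins: int = 2) -> Dict[int, Dict[str, float]]:
    """Share of each outcome within equal-width bins of win probability."""
    counts: Dict[int, Counter] = defaultdict(Counter)
    for win_prob, max_sim, enters_more in records:
        bin_index = min(int(win_prob * n_bins), n_bins - 1)  # keep win_prob == 1.0 in the top bin
        counts[bin_index][classify(max_sim, enters_more)] += 1
    return {
        b: {outcome: n / sum(c.values()) for outcome, n in c.items()}
        for b, c in counts.items()
    }


# Made-up records, not data from the paper.
records = [(0.9, 0.8, True), (0.8, 0.9, True), (0.5, 0.2, True), (0.1, 0.3, False)]
shares = outcome_shares(records)
print(shares)
```

With real data, finer bins (a larger `n_bins`) would trace out how the three shares move as the probability of winning changes.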

Figure 3 Probability of tweaks, original designs, and abandonment

Notes: Figure plots the probability that a designer does one of the following on (and after) a given submission, as a function of their contemporaneous win probability: tweaks and then enters more designs (Panel A), experiments and then enters more designs (Panel B), or stops investing in the contest (Panel C). These probabilities are estimated as described in the paper, and the bars around each point provide the associated 95% confidence interval.

Lessons and implications

This adds to evidence on the effects of competition on creative output. Whereas economists argue that competition can motivate the kind of risk-taking that is characteristic of inventive activity (Cabral 2003, Anderson and Cabral 2007), many psychologists claim that extrinsic pressures like competition will stifle creativity by crowding out intrinsic motivation (for a review, see Hennessey and Amabile 2010) or by causing agents to choke (Ariely et al. 2009).

Lab-based studies of creativity are similarly mixed. The creativity literature has not so far studied the nuance that competition is not strictly a binary, yes-or-no condition, but rather can vary in intensity across treatments. My research shows the effects depend on the intensity of competition.

The finding that balanced competition generates creativity has implications for managers of creative industries and for procurement in organisations. Although intrinsic motivation can be valuable, this paper shows that winner-takes-all competition can motivate creativity, if properly managed. We can speculate that a few competitors of similar ability (perhaps even one) are enough to motivate creativity, whereas many strong competitors may discourage this effort.

References

Anderson, A and L Cabral (2007), “Go for broke or play it safe? Dynamic competition with choice of variance”, RAND Journal of Economics 38(3): 593-609.

Ariely, D, U Gneezy, G Loewenstein, and N Mazar (2009), “Large Stakes and Big Mistakes”, Review of Economic Studies 76: 451-469.

Brown, T (2008), “Design Thinking”, Harvard Business Review, June.

Bureau of Labor Statistics (2010), “County Business Patterns”.

Cabral, L (2003), “R&D Competition When Firms Choose Variance”, Journal of Economics & Management Strategy 12(1): 139-150.

Florida, R (2002), The Rise of the Creative Class, Basic Books.

Gross, D P (2019), “Creativity Under Fire: The Effects of Competition on Creative Production”, Review of Economics and Statistics, forthcoming.

Hennessey, B A and T M Amabile (2010), “Creativity”, Annual Review of Psychology 61: 569-598.

Mitchell, C, R L Ray, and B van Ark (2012, 2013, 2014), CEO Challenge Report, The Conference Board.

Endnotes

[1] The designs in Figure 1 are not necessarily from the platform from which the data in this paper were obtained. To keep the data source anonymous, I have omitted identifying information.