Economics has a long tradition of relying on quantitative models for both presenting its theory and testing it empirically. In fact, Joseph Schumpeter, in the first edition of Econometrica in 1933, described economics as the most quantitative of all sciences. Optimisation is an inherent part of this methodology. In theoretical models, agents are presented as utility maximisers and firms try to maximise profit or to minimise cost. Selecting and estimating models for given data sets amounts to optimisation as well – sums of squares are minimised and likelihoods are maximised so routinely today that often researchers may not even be aware that fitting a model means optimising it.

When building models, economists are often limited by the fact that the model later needs to be solved, ideally in a closed-form. Some researchers have abandoned relying on “representative agents” and opted for more complex models, relying on computer simulations to obtain results. Such agent-based models, if they are to be a viable alternative to more standard approaches, need to be “tuned” such that the results from these models coincide with empirical facts. This, again, is an estimation – and hence optimisation – problem. In this column, we will primarily discuss estimation problems in econometrics, but the problem is much more general and applies to many areas in economics (see Winker (2001), chapter 2).

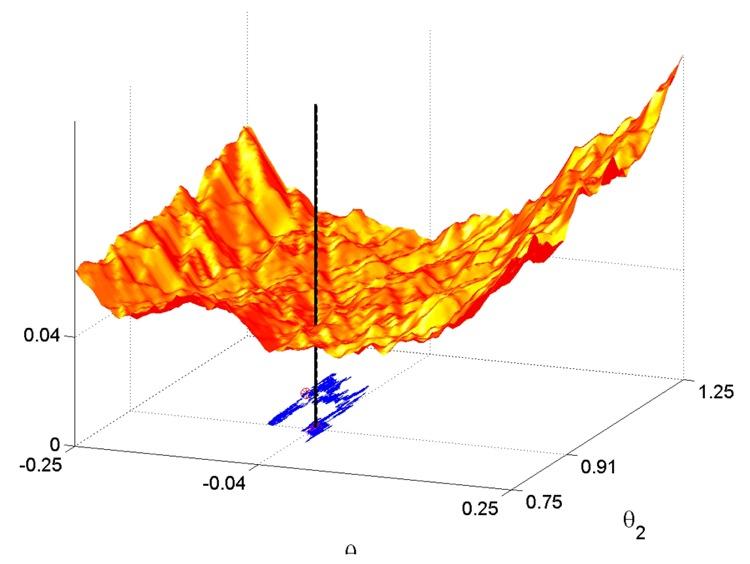

More formally, optimisation problems in estimation and modelling are typically expressed as max f(x) where f may be a likelihood function and x the model’s parameters (of course numerically, max f(x) is the same as min -f(x)). Interestingly, the problem max f(x) is often considered synonymous with the solution xopt, which tacitly assumes that a solution exists and is unique. As McCullough and Vinod (1999, p. 635) state, “Many textbooks convey the impression that all one has to do is use a computer to solve the problem, the implicit and unwarranted assumptions being that the computer’s solution is accurate and that one software package is as good as any other”. Goffe et al. (1994) were amongst the first to recognise that many optimisation problems in econometrics cannot be solved with standard methods because of the existence of multiple optima and discontinuities. These are not pathological examples. These problems even occur for widely used models such as generalised autoregressive conditional heteroscedasticity (GARCH) regressions (see Doornik and Ooms, 2008)). Another example comes from robust (‘resistant’) regression. Figure 1 shows the objective function for estimating a linear regression by minimising the Least Median of Squares (LMS), instead of the Least (Mean of) Squares. The function has many local optima and does not look very smooth.

Figure 1. Least median of squares objective function

These two examples illustrate different problems in optimisation. The GARCH model shows that even widely applied models are not necessarily easy to estimate, and researchers are sometimes not even aware of the computational difficulties. The LMS model serves as an example where possibly superior models are not used because they seem difficult to estimate.

Standard methods, for example for maximum likelihood estimation, are essentially hill-climbing algorithms. They start with a given solution, and then proceed deterministically into the direction where the likelihood increases, for instance by following the direction of the gradient. It is easy to see that such an algorithm is likely to fail in a situation with many local optima, since it will stop at the first peak. Some methods also require the landscape, i.e. the objective function, to have certain properties like being continuous. If these properties are missing, the algorithm does not work. What is worse, the employed software may not report an error but just return some value.

Heuristic methods

Heuristic methods offer a solution in such cases. Heuristics are not really new in optimisation theory (some of the algorithms go back to the 1960s), but they have only become practically relevant with the enormous increase in computing power over the last two decades or so. The term heuristics actually covers a wide range of different techniques, many of which have been inspired by evolutionary or biological processes. There are, for instance, methods that resemble the evolution of species (e.g., genetic algorithms or differential evolution) or flocks of birds that are looking for food (particle swarm). Gilli and Winker (2008) provide an overview of such methods and their use in econometrics.

Still, there are common principles. Heuristic methods often employ similar strategies to overcome the problem of getting stuck in local optima. One is that while travelling through the search space, they temporarily allow for an impairment of the objective function – the algorithm may also move downhill. This, for instance, is the distinguishing property of simulated annealing, one of the best-known methods. A variant of this principle is to maintain at any point in time a whole population of solutions, some of which are worse than others.

A second principle is the deliberate use of randomness for creating new candidate solutions. Genetic algorithms, for instance, construct new candidates by randomly mixing parent solutions (‘cross over’) and perturbing (‘mutating’) single elements of the resulting child solution.

Let us examine one such algorithm, threshold accepting, in more detail. Threshold accepting builds on a very simple concept in numerical optimisation called local search. A local search starts with a randomly chosen feasible solution and then picks, again randomly, a solution close by as a new candidate solution. If this new solution, called a neighbour solution, is better, the algorithm moves to this new solution. If the neighbour is worse, it stays at the initial solution and selects a new neighbour. The algorithm stops after a preset large number of iterations, or if there was no improvement any more for a number of steps. In a problem with only one maximum, the algorithm will, slowly but surely, move to this optimal point.

Unfortunately, if there is more than one peak, there is no guarantee that local search will find the truly best one. Threshold accepting works just like local search, but with a small difference. When the algorithm evaluates a neighbour solution, it may also accept this new solution if it is worse as long as the impairment is not worse than a small threshold (hence the method's name). This threshold is often set rather generously initially, so that the algorithm may move freely in the search space. Over time, the thresholds are decreased; hence the algorithm gets choosier and finally turns into a local search. Introducing thresholds may seem like a small adjustment to local search (computationally, it is), but it leads to enormous improvements in the optimisation procedure, as the algorithm is now able to walk away from local maxima. In econometrics, threshold accepting has been used in model selection, to determine optimal lag structures in vector autoregression models (both actually discrete problems), or to estimate GARCH models.

This conceptual simplicity is actually a property of many optimisation heuristics – still, the methods work surprisingly well in practice. Unfortunately they are not yet widely applied in economics and econometrics. This neglect may be due to the fact that people are not aware of the inherent complexity of many optimisation problems and the lack of well-documented implementations of heuristics for standard software packages. The Marie Curie Research and Training Network ‘Computational Optimisation Methods in Statistics, Econometrics and Finance’ (www.comisef.eu) aims to contribute to the proliferation of heuristic methods in economics.

References

Doornik, J. and M. Ooms (2008). Multimodality in GARCH regression models. International Journal of Forecasting 24, 432-448.

Gilli, M. and P. Winker (2008). A Review of Heuristic Optimization Methods in Econometrics. Swiss Finance Institute Research Paper No. 08-12

Goffe, W. L., G. Ferrier and J. Rogers (1994). Global optimization of statistical functions with simulated annealing. Journal of Econometrics 60, 65-99.

McCullough, B. D. and H. D. Vinod (1999). The Numerical Reliability of Econometric Software. Journal of Economic Literature 38, 633-665.

Schumpeter, J.A. (1933). The Common Sense of Econometrics. Econometrica 1, 5-12.

Winker, P. (2001). Optimization Heuristics in Econometrics. Wiley, Chichester.