Since at least the 1970s, psychologists have been pointing out that financial incentives can harm performance by crowding out the enjoyment we would otherwise derive from working on a task (Deci 1971). An enjoyable task morphs into one that we do for the money, and intrinsic motivation is crowded out. If the extrinsic motivation provided by the financial incentive is not strong enough to compensate, monetary rewards reduce performance. While not universally accepted, the motivation crowding theory is the default incentive model in psychology and related fields (Engström and Bengtsson 2014, Deserranno 2015, Bowles 2016, Pope and DellaVigna 2016).

A widely cited meta-analysis by Weibel et al. (2010) reports that financial incentives indeed hurt performance in the case of interesting tasks. While economists have long been aware of the psychology theory and evidence (Camerer and Hogarth 1999, Gneezy and Rustichini 2000, Frey and Jegen 2001, Gneezy et al. 2011, Esteves-Sorenson and Broce 2022), few models used in economics allow for motivation crowding. The following statement recently expressed prominently on the website of a leading management consultancy reflects the prior of many economists:

"Generous and specific financial incentives can help drive and sustain a rapid performance improvement" (McKinsey 2022)

In this column, based on a synthesis of empirical studies on the topic (Cala et al. 2022), we argue that empirical evidence, even in economics, does not support the prior. The finding has far-reaching policy consequences: incentives or nudges that rely mainly on financial motives, such as offering money to people for getting their Covid-19 shots, may be less effective than commonly thought.

A meta-analysis

The purpose of our meta-analysis is threefold (Cala et al. 2022). First, we correct the literature for publication bias, which can exaggerate the underlying effect multiplicatively (Ioannidis et al. 2017). Second, we allow for model uncertainty (Steel 2020), which is important given how individual experiments differ. Third, we focus on economics. Existing meta-analyses have focused exclusively or to a large extent on psychology. The economics literature is thus largely unexplored, although researchers have pointed out the vast differences in priors and methodological approaches between economics and psychology experiments when it comes to the effect of money on behaviour (Camerer and Hogarth 1999, Hertwig and Ortmann 2001, Esteves-Sorenson and Broce 2022).

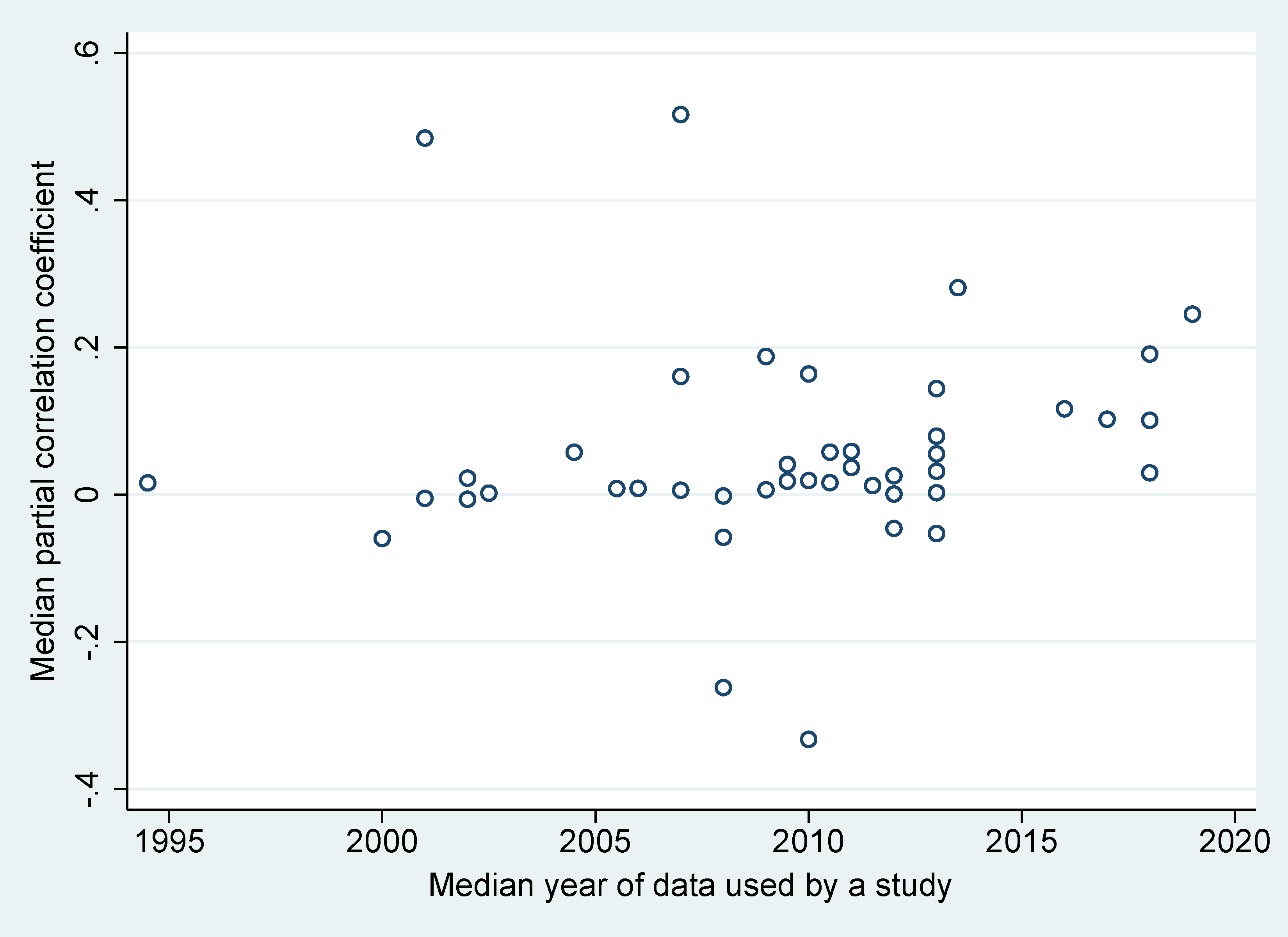

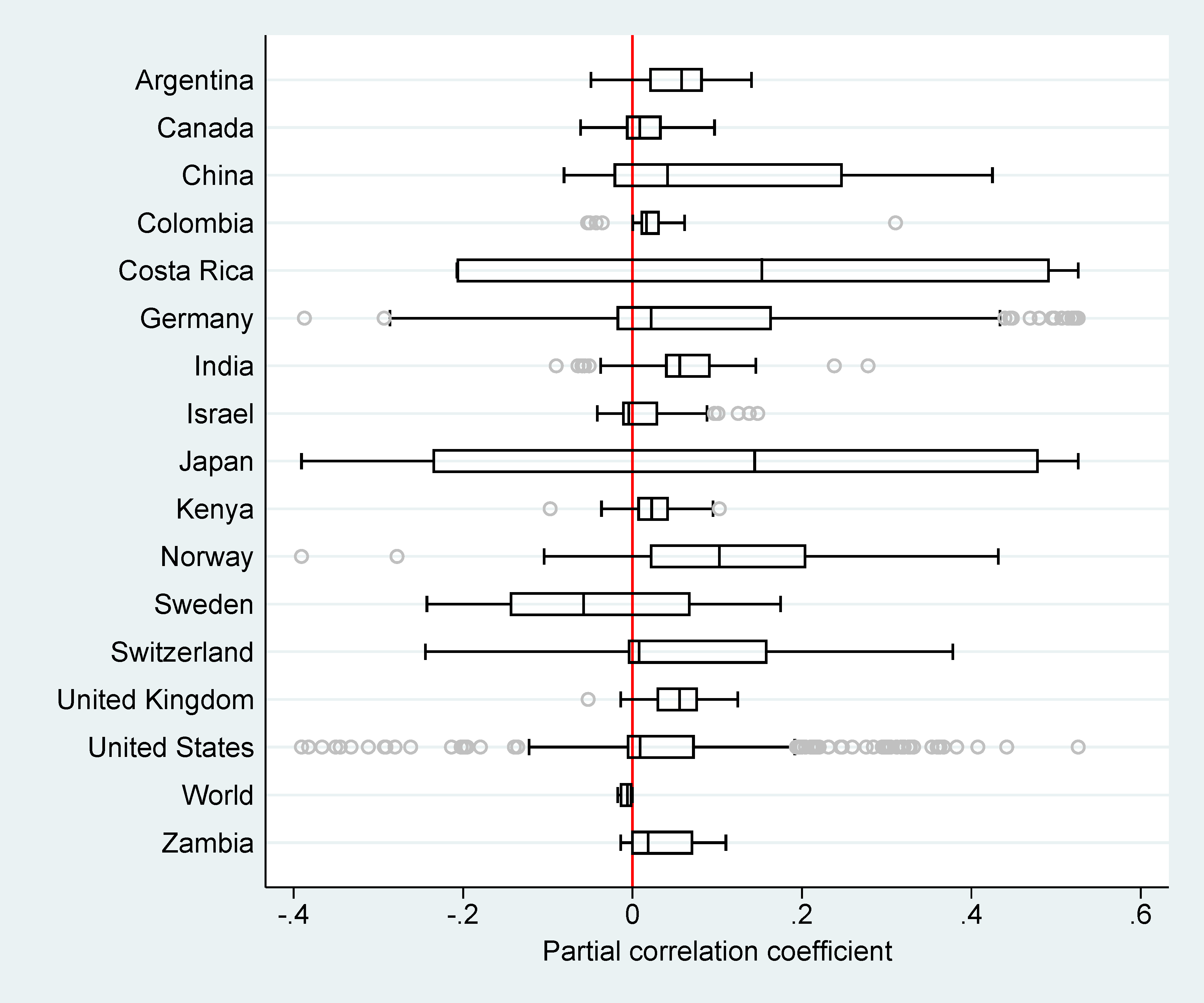

Figure 1 presents a bird’s-eye view of the experimental economics literature on the topic. The median estimates from each study, recomputed to partial correlations for comparability, commonly range between 0 and 0.2, though some studies report correlations of −0.3 or 0.5. The estimates do not converge to a consensus value. Figure 2 shows that results vary across countries. Surprisingly for an economist, estimates are far from being robustly and consistently positive.

Figure 1 Estimated effects of incentives vary across studies, and do not converge to a consensus value

Figure 2 Effects of incentives vary across and within countries, and centre around small positive values
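The conversion to partial correlations mentioned above can be illustrated with the standard formula that maps a regression t-statistic and its residual degrees of freedom into a partial correlation. The sketch below is only an illustration under that assumption; the function name `partial_correlation` and its arguments are hypothetical and are not taken from Cala et al. (2022).

```python
import math


def partial_correlation(t_stat, dof):
    """Convert a t-statistic into a partial correlation and its standard error.

    Assumes the standard formula r = t / sqrt(t^2 + dof), where dof is the
    residual degrees of freedom of the regression that produced t_stat.
    """
    if dof <= 0:
        raise ValueError("degrees of freedom must be positive")
    r = t_stat / math.sqrt(t_stat ** 2 + dof)
    # With this standard error, r / se reproduces the original t-statistic.
    se = math.sqrt((1.0 - r ** 2) / dof)
    return r, se
```

For instance, a t-statistic of 2 with 96 degrees of freedom corresponds to a partial correlation of 0.2 with a standard error of 0.1. Because the measure is unit-free, estimates from experiments with very different performance measures can be placed on one scale.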

Publication bias

None of the previous meta-analyses in psychology (Jenkins et al. 1998, Condly et al. 2003, Weibel et al. 2010, Garbers and Konradt 2014, Kim et al. 2022) corrected the literature for publication bias. Publication bias arises when some results – typically those that are intuitive and statistically significant – are preferentially selected for publication. Selective reporting can work at the level of entire studies – for example, studies may end up unpublished, forever hidden in a file drawer, because of their insignificant results.

More plausibly, however, selective reporting works as self-censorship practised by the authors themselves (Brodeur et al. 2022). In the context of the incentive performance literature, researchers can, for example, alter the measure of performance they report (Esteves-Sorenson and Broce 2022) or choose a subset of the data until they get a desired outcome.

Selective reporting does not equal cheating and can be unintentional. McCloskey and Ziliak (2019) draw a useful analogy to the Lombard effect in psychoacoustics: speakers involuntarily increase their vocal effort in the presence of noise. In a similar way, researchers may increase their effort to find a plausible estimate when there is noise in their data.

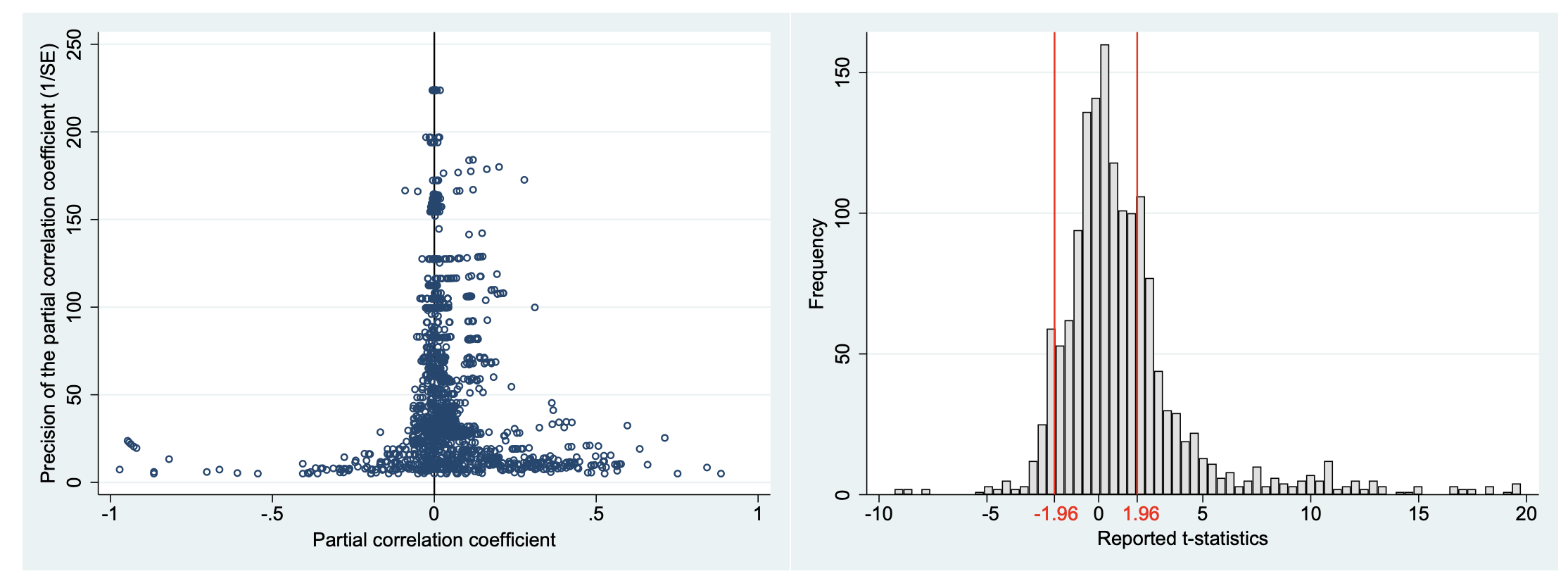

Figure 3 Negative and statistically insignificant estimates are under-reported

Figure 3 shows two graphs used to detect publication bias. The left-hand panel plots estimates on the horizontal axis against their precision on the vertical axis. In the absence of bias, this ‘funnel plot’ should be symmetrical. But we can see that many negative estimates are missing – perhaps not reported because they are not intuitive. In a similar vein, the right-hand panel shows that estimates that are just statistically insignificant at the 5% level, and thus have t-statistics just below 1.96 in absolute value, are under-reported.
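The idea behind the right-hand panel can be sketched as a simple count of t-statistics in narrow bands on either side of the 1.96 threshold, in the spirit of what is often called a caliper test. This is a minimal illustration and not necessarily the procedure used in the underlying study; the function name `caliper_counts` and the band width of 0.2 are assumptions made for the example.

```python
def caliper_counts(t_stats, threshold=1.96, width=0.2):
    """Count |t| values just below and just above a significance threshold.

    Without selective reporting, the two narrow bands should hold roughly
    similar numbers of estimates. The band width is an arbitrary choice.
    """
    below = sum(1 for t in t_stats if threshold - width <= abs(t) < threshold)
    above = sum(1 for t in t_stats if threshold <= abs(t) < threshold + width)
    return below, above
```

A marked excess of estimates in the band just above the threshold, relative to the band just below it, is the pattern that suggests marginally insignificant results are under-reported.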

We use a battery of statistical methods that formalise the ideas of both panels of Figure 3. All methods find evidence of publication bias, which pushes the mean reported estimate upwards. After correction for the bias, the mean experimental result suggests a negligible effect of financial incentives on performance – a striking result to an economist.
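One common way to formalise the funnel plot is to regress each estimate on its standard error, weighting by precision; this is often called the funnel asymmetry test and precision-effect test (FAT-PET). The sketch below illustrates that general idea only and is not necessarily one of the specifications in Cala et al. (2022). The function name `fat_pet` and its inputs are hypothetical.

```python
import numpy as np


def fat_pet(estimates, std_errors):
    """Precision-weighted regression: estimate_i = effect + bias * SE_i + error_i.

    Dividing the equation by SE_i gives the equivalent form
    t_i = bias + effect * (1 / SE_i) + u_i, which is fitted here by OLS.
    """
    est = np.asarray(estimates, dtype=float)
    se = np.asarray(std_errors, dtype=float)
    if np.any(se <= 0):
        raise ValueError("standard errors must be positive")
    t_values = est / se
    design = np.column_stack([1.0 / se, np.ones_like(se)])
    coef, *_ = np.linalg.lstsq(design, t_values, rcond=None)
    return {
        "corrected_effect": float(coef[0]),  # mean effect as SE approaches zero
        "bias": float(coef[1]),  # slope on SE; non-zero suggests asymmetry
    }
```

In this setup, a positive slope on the standard error means that imprecise studies report systematically larger estimates, which is what an asymmetric funnel looks like in regression form. The intercept is then read as the mean effect of a hypothetical study with perfect precision.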

Experimental context

The experiments on this topic vary so much that a reader may ask how a mean estimate is informative. Indeed, researchers focus on different definitions of performance: work outcomes, school grades, games, blood donations, among others. The task itself can be appealing or unappealing, cognitive or manual. Outputs can be measured quantitatively or qualitatively.

Reward size and framing also differ across experiments – sometimes only individual people are paid, sometimes the rewards are group-specific. Some experiments are conducted in a lab, but most are field studies. Subjects differ in terms of gender, occupation, age, and culture. Various statistical techniques are used to produce the results.

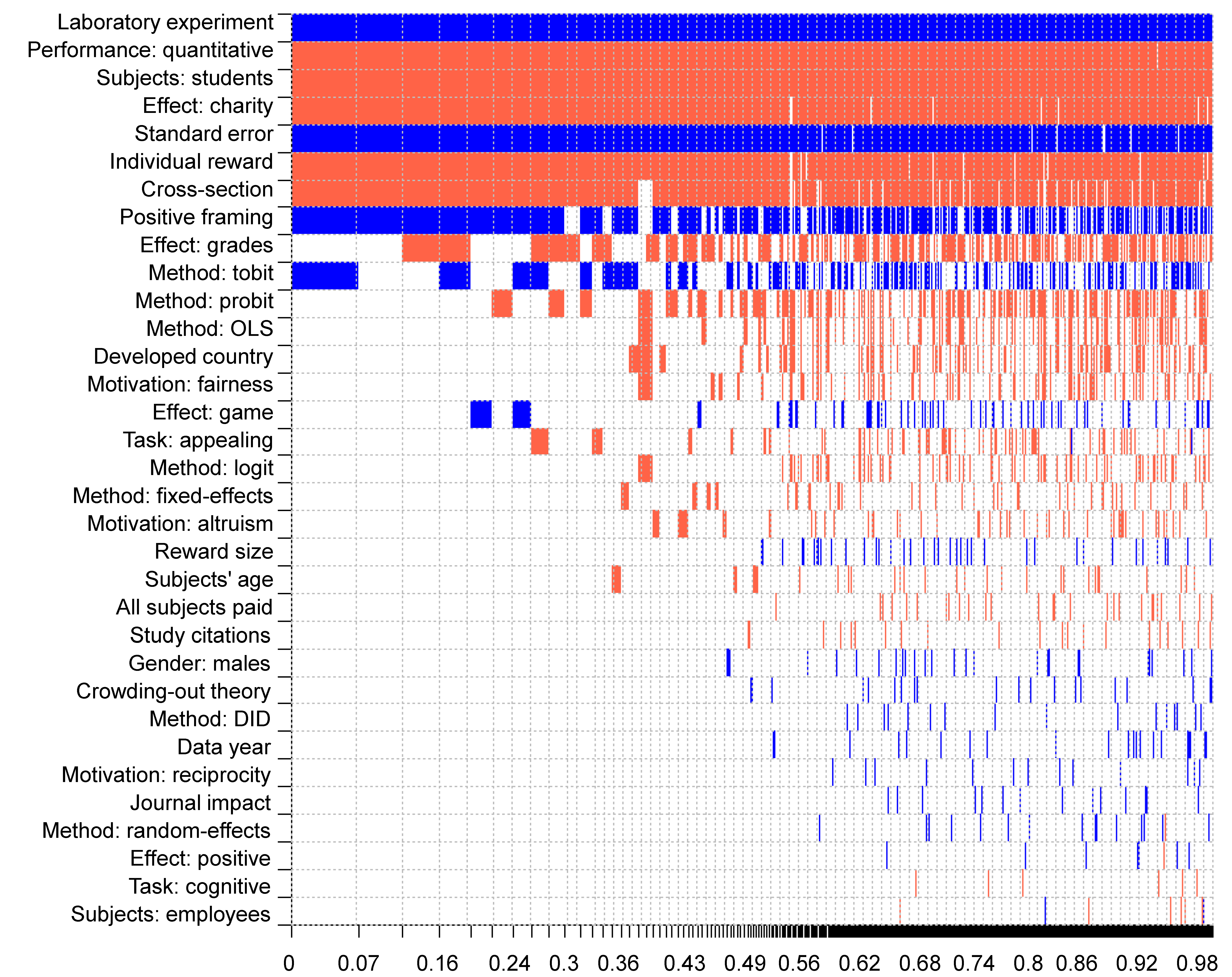

To account for the differences in estimation context, we employ Bayesian model averaging, the natural solution to model uncertainty in the Bayesian framework (Steel 2020). The results suggest that some method choices drive the results systematically, as depicted in Figure 4. The most important drivers of heterogeneity are shown at the top. Blue denotes a positive effect on the estimated incentive-performance nexus, and red denotes a negative effect. Columns denote different models, and the horizontal axis measures each model’s importance.

Figure 4 Why estimates of the effect of incentives on performance vary
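The logic of model averaging can be sketched with a deliberately simplified version: fit a regression for every subset of candidate variables, weight each model by an approximation to its posterior probability, and sum the weights of the models in which a variable appears. The example below uses the BIC approximation to model weights, which is a swapped-in simplification and not the prior structure of the underlying study. The function name `bma_bic` and the variable names passed to it are hypothetical.

```python
import itertools

import numpy as np


def bma_bic(y, X, names):
    """Approximate posterior inclusion probabilities by BIC-weighted averaging.

    Enumerates all subsets of the columns of X (feasible only for a handful
    of variables) and always includes an intercept.
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    models = []
    for size in range(k + 1):
        for subset in itertools.combinations(range(k), size):
            Z = np.column_stack([np.ones(n)] + [X[:, j] for j in subset])
            beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
            rss = float(np.sum((y - Z @ beta) ** 2))
            bic = n * np.log(rss / n) + Z.shape[1] * np.log(n)
            models.append((subset, bic))
    bics = np.array([bic for _, bic in models])
    # Model weights proportional to exp(-BIC / 2), shifted for numerical stability.
    weights = np.exp(-0.5 * (bics - bics.min()))
    weights /= weights.sum()
    pip = {name: 0.0 for name in names}
    for (subset, _), weight in zip(models, weights):
        for j in subset:
            pip[names[j]] += float(weight)
    return pip
```

The returned dictionary maps each variable to its approximate posterior inclusion probability. In a chart like Figure 4, each column is one such model, with column width reflecting its weight; variables with high inclusion probabilities are the ones that appear in most of the heavily weighted models.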

The composition of the subject pool matters, as does the framing of rewards, individual versus group rewards, and qualitative versus quantitative measurement of output. Financial incentives are even less efficient in improving grades and pro-social behaviour than they are in improving performance at games and work. But, importantly, the differences are small.

The implied correlations for various experimental contexts after correction for bias and accounting for model uncertainty are always statistically insignificant and negligible according to the Doucouliagos (2011) guidelines for the interpretation of partial correlations. The only exception is laboratory experiments, but even here the implied effect is tiny. In Cala et al. (2022) we show the numerical results for different scenarios.

Bottom line

We conclude that, regarding the effect of financial incentives on performance, the experimental economics literature is inconsistent with most models commonly employed in economics.

The results do not fit neatly in the mainstream psychology framework either. The motivation crowding theory assumes that the crowding out of intrinsic motivation happens only in the case of interesting tasks, exactly as reported by Weibel et al. (2010). The problem is that the definition of an interesting task is subjective, and some people will enjoy tasks that others find unappealing.

Another potential explanation is that reward cues distract people from the task itself; a recent meta-analysis shows that this effect can be important (Rusz et al. 2020). The distraction effect can exist for both interesting and uninteresting tasks and is more likely in field settings, where the experimenter does not always have full control over the connection between reward cues and the task itself.

References

Bowles, S (2016), “Moral sentiments and material interests: When economic incentives crowd in social preferences”, VoxEU.org, 26 May.

Brodeur, A, S Carrell, D Figlio, and L Lusher (2022), “Unpacking p-hacking and publication bias”, working paper, University of Ottawa.

Cala, P, T Havranek, Z Irsova, J Matousek, and J Novak (2022), “Financial Incentives and Performance: A Meta-Analysis of Economics Evidence”, CEPR Press Discussion Paper No. 17680.

Camerer, C F and R M Hogarth (1999), “The effects of financial incentives in experiments: A review and capital-labor-production framework”, Journal of Risk and Uncertainty 19(1): 7–42.

Condly, S J, R E Clark, and H D Stolovitch (2003), “The Effects of Incentives on Workplace Performance: A Meta-analytic Review of Research Studies”, Performance Improvement Quarterly 16(3): 46–63.

Deci, E L (1971), “Effects of externally mediated rewards on intrinsic motivation”, Journal of Personality and Social Psychology 18(1): 105–115.

Deserranno, E (2015), “Financial incentives as signals: Evidence from a recruitment experiment”, VoxEU.org, 11 Feb.

Doucouliagos, H (2011), “How large is large? Preliminary and relative guidelines for interpreting partial correlations in economics”, Working Papers 5/2011, Deakin University.

Engström, P and N Bengtsson (2014), “The audit society and its enemies”, VoxEU.org, 28 Oct.

Esteves-Sorenson, C and R Broce (2022), “Do Monetary Incentives Undermine Performance on Intrinsically Enjoyable Tasks? A Field Test”, Review of Economics and Statistics (forthcoming).

Frey, B S and R Jegen (2001), “Motivation Crowding Theory”, Journal of Economic Surveys 15(5): 589–611.

Garbers, Y and U Konradt (2014), “The effect of financial incentives on performance: A quantitative review of individual and team-based financial incentives”, Journal of Occupational and Organizational Psychology 87(1): 102–137.

Gneezy, U, S Meier, and P Rey-Biel (2011), “When and Why Incentives (Don’t) Work to Modify Behavior”, Journal of Economic Perspectives 25(4): 191–210.

Gneezy, U and A Rustichini (2000), “Pay enough or don’t pay at all”, The Quarterly Journal of Economics 115(3): 791–810.

Hertwig, R and A Ortmann (2001), “Experimental practices in economics: A methodological challenge for psychologists?”, Behavioral and Brain Sciences 24(3): 383–451.

Ioannidis, J P, T D Stanley, and H Doucouliagos (2017), “The Power of Bias in Economics Research”, Economic Journal 127(605): F236–F265.

Jenkins, G D, A Mitra, N Gupta, and J D Shaw (1998), “Are financial incentives related to performance? A meta-analytic review of empirical research”, Journal of Applied Psychology 83(5): 777–787.

Kim, J H, B Gerhart, and M Fang (2022), “Do Financial Incentives Help or Harm Performance in Interesting Tasks?”, Journal of Applied Psychology 107(1): 153–167.

McCloskey, D N and S T Ziliak (2019), “What Quantitative Methods Should We Teach to Graduate Students? A Comment on Swann’s Is Precise Econometrics an Illusion?”, The Journal of Economic Education 50(4): 356–361.

McKinsey (2022), “The powerful role financial incentives can play in a transformation”, 19 January.

Pope, D G and S DellaVigna (2016), “Inducing effort with behavioural intervention”, VoxEU.org, 29 May.

Rusz, D, M L Pelley, M Kompier, L Mait, and E Bijleveld (2020), “Reward-driven distraction: A meta-analysis”, Psychological Bulletin 146(10): 872–899.

Steel, M F J (2020), “Model Averaging and its Use in Economics”, Journal of Economic Literature 58(3): 644–719.

Weibel, A, K Rost, and M Osterloh (2010), “Pay for Performance in the Public Sector—Benefits and (Hidden) Costs”, Journal of Public Administration Research and Theory 20(2): 387–412.