Public awareness of the capacity of large language models (LLMs) to perform complex tasks and generate life-like interactions has exploded in the past year. This has led to an immense amount of media attention and policy debate about their impact on the economy and on society (Ilzetzki and Jain 2023). In this column, we focus on the potential role of LLMs as a research tool for economists who work with text data. We first lay out some key concepts needed to understand how such models are built, and then pose four key questions that we believe need to be addressed when considering the integration of LLMs into the research process. For further details, we refer readers to our recent review article on text algorithms in economics (Ash and Hansen 2023).

What is a large language model?

Large language models are essentially predictive models for sequential data. Given an input sequence of text, they can be targeted towards predicting randomly deleted words – akin to filling in gaps in a time series – or the most likely subsequent word, mirroring the process of forecasting the next data point in a time series. Consider predicting the word that underlies [MASK] in the following sentences:

- As a leading firm in the [MASK] sector, we hire highly skilled software engineers.

- As a leading firm in the [MASK] sector, we hire highly skilled petroleum engineers.

Most humans would predict that the word underlying [MASK] in the first sentence relates to technology, and that the word underlying [MASK] in the second sentence relates to energy. The key words informing these predictions are ‘software’ and ‘petroleum’, respectively. Humans intuitively know which are the important words in the sequence they must pay attention to in order to make accurate forecasts. Moreover, these words may lie relatively far from the target word to predict, as in the above examples.

An algorithmic breakthrough for identifying important context words in long sequences is attention. Each word in a sequence is initially given a vector representation. Then these representations are updated by combining all the initial vectors with different weights. So, in the above example, an initial vector representation of [MASK] can be updated by placing relatively high weight on the word ‘software’ and low weights on other words which are less important.

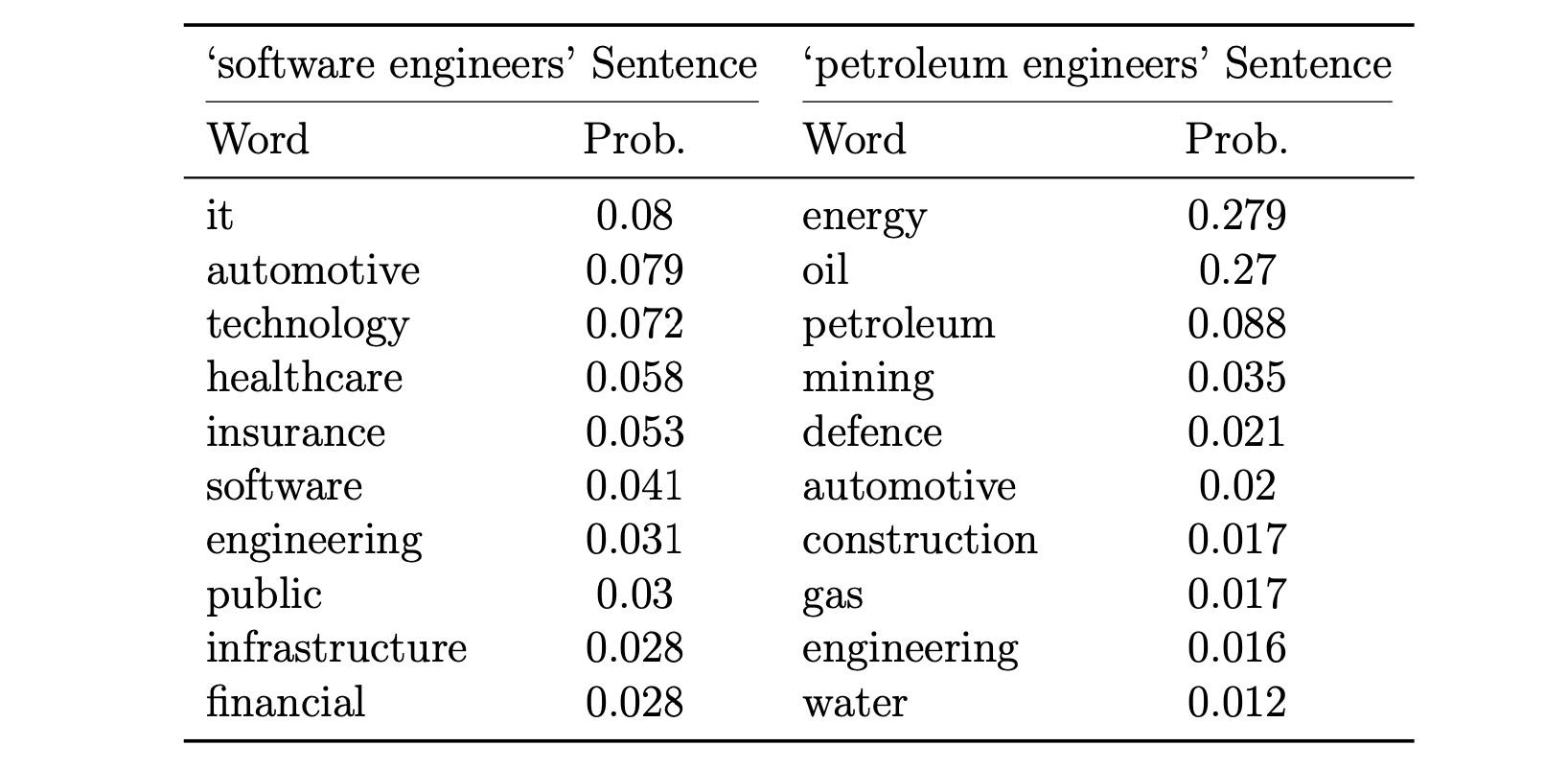
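The weighting step described above can be sketched in a few lines of Python. This is a minimal illustration, not the computation of any actual LLM: the two-dimensional word vectors are invented by hand, and the weights come from a softmax over plain dot products, leaving out the learned query, key and value transformations that real attention layers use.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to one."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, vectors):
    """One attention step: weight every vector by its similarity to the query."""
    scores = [sum(q * v for q, v in zip(query, vec)) for vec in vectors]
    weights = softmax(scores)
    updated = [
        sum(w * vec[i] for w, vec in zip(weights, vectors))
        for i in range(len(query))
    ]
    return weights, updated

# Hypothetical initial vectors, chosen so that 'software' is most similar to [MASK].
words = ["leading", "firm", "[MASK]", "sector", "software", "engineers"]
vectors = {
    "leading": [0.1, 0.0],
    "firm": [0.3, 0.1],
    "[MASK]": [1.0, 1.0],
    "sector": [0.5, 0.2],
    "software": [2.0, 2.0],
    "engineers": [0.8, 0.4],
}

weights, updated = attend(vectors["[MASK]"], [vectors[w] for w in words])
top = max(zip(weights, words))[1]  # the word receiving the highest weight
```

With these made-up vectors, the updated representation of [MASK] is a weighted average dominated by 'software'. In an actual model the vectors and the similarity function are estimated from data rather than set by hand.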

LLMs are neural networks with many separate attention operations that allow for rich and high-order interactions among words. The parameters of these models are then adjusted so that the models can successfully complete the given forecasting tasks. Table 1 shows the most likely masked words in each example sentence according to a particular LLM (Hansen et al. 2023). The growth in the size of LLMs is driven primarily by allowing further attention operations and longer input sequences for informing predictions.

Table 1 Predictions for masked words in example sentences

More recent models, the best known of which is ChatGPT, are further adjusted to incorporate human feedback through human alignment. Since LLMs are forecasting models, any particular input sequence will generate outputs according to an estimated probability distribution. Humans can assess these various outputs and rank them according to their perceived reasonableness or relevance. In response, ChatGPT and similar models recalibrate the forecasting model to assign higher probabilities to outcomes favoured by humans and lower probabilities to less preferred outcomes (via reinforcement learning). This process effectively optimises the utility of the humans tasked with ranking model outputs, which enhances the overall performance and efficiency of the model.
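One stylised way to see this recalibration is to start from the model's probabilities over candidate outputs and tilt them towards the outputs that human rankers score highly. The sketch below uses exponential tilting, in which each new probability is proportional to the old probability times exp(reward / beta). This is the closed-form solution to maximising expected reward subject to a penalty, scaled by beta, on the Kullback-Leibler divergence from the original model. It is an illustration under assumptions, not the training procedure of ChatGPT: the candidate outputs, base probabilities, reward scores and the value of `beta` are all invented.

```python
import math

def tilt(base_probs, rewards, beta=1.0):
    """Reweight base probabilities towards high-reward outputs.

    A smaller beta moves further away from the base model.
    """
    weights = {y: p * math.exp(rewards[y] / beta) for y, p in base_probs.items()}
    total = sum(weights.values())
    return {y: w / total for y, w in weights.items()}

# Hypothetical candidate outputs with the base model's probabilities.
base_probs = {"helpful answer": 0.2, "vague answer": 0.5, "rude answer": 0.3}

# Hypothetical scores summarising how human rankers ordered the outputs.
rewards = {"helpful answer": 2.0, "vague answer": 0.0, "rude answer": -2.0}

aligned = tilt(base_probs, rewards, beta=1.0)
```

After tilting, the output that humans preferred receives more probability mass than before and the least preferred output receives less. In practice the reward is itself a model estimated from human rankings, and the adjustment is made to the network's parameters rather than to a table of probabilities.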

Finally, many researchers further fine-tune generic language models to perform non-language-based predictive tasks. In this workflow, one begins with a previously estimated, or pre-trained, LLM and then adjusts the model parameters to perform whatever predictive task might be of interest. Examples from the economics literature include Bajari et al. (2021), which uses product description text to predict product prices, and Bana (2022), which uses job posting text to predict wages. LLMs typically achieve outstanding performance in such predictive exercises.

Key questions for the use of LLMs in economic research

1. What weight should be placed on replicability?

Reproducibility and replicability are cornerstones of robust economic research, a stance that remains pertinent in the context of AI’s increasing integration into the field. These principles ensure the reliability of the findings and the soundness of the methodologies employed. As LLM pre-training is predominantly being undertaken by large corporations due to the substantial resources involved, there are legitimate concerns around research transparency and replicability.

Adding to these concerns is the recent tendency for these organisations to withhold critical information on model architectures and training data. This lack of transparency hinders the ability of researchers to verify results and build upon existing work, contravening the values that underpin scientific research – open inquiry and the accumulation of shared knowledge.

One norm that the economics community might follow is to only publish research that utilises relatively open-source models. This approach would encourage transparency and reproducibility in economic research involving LLMs and AI more generally. By advocating for open access to resources necessary for independent validation or extension of research findings, the academic community can foster an environment that respects traditional research principles while also adapting to the distinctive challenges of AI development in economics.

2. In what ways should LLMs be adapted to economic research?

Adapting LLMs for economic research can open up new possibilities for analysis and prediction. As mentioned above, one approach involves fine-tuning LLMs for specific prediction tasks relevant to economics, such as financial or macroeconomic data forecasting. Another way to enhance LLMs’ effectiveness in economics is by pre-training them on domain-specific text. For example, having an LLM learn from the full corpus of working papers from economic research hubs like the National Bureau of Economic Research or the Centre for Economic Policy Research could imbue it with a more nuanced understanding of economic discourse and concepts.

Reinforcement learning exercises could also be modified to align more closely with the utility functions of economists. This could potentially result in LLMs that provide more insightful responses to high-level economic queries, such as those concerning the relationships between economic shocks. Such an approach could push the boundaries of what is currently possible with LLMs in economic research, facilitating a deeper exploration of complex economic phenomena.

Finally, a crucial question remains: are the processes of pre-training, fine-tuning, and alignment complements or substitutes? Understanding the relationships among these different aspects of training LLMs is vital for leveraging their full potential in economic research. As we continue to explore these questions and develop new methodologies, the role of LLMs in economics promises to become even more prominent and transformative.

3. What is the trade-off between transparency and prediction success?

The balance between transparency and prediction success in economic research presents a complex trade-off. On one hand, simpler models like bag-of-words or dictionary methods – which focus only on word frequencies and ignore context – may offer less predictive power than LLMs. However, they offer benefits like lower computational costs, ease of interpretation, and better-understood statistical properties, contributing to greater transparency. Their simplicity allows researchers to understand the inner workings of the model more clearly, offering insights into why a particular prediction was made.
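To make the contrast concrete, here is a minimal sketch of a bag-of-words representation using only Python's standard library. The tokeniser (lowercase, keep runs of letters) is a simplifying assumption, and the second pair of sentences is invented for illustration.

```python
import re
from collections import Counter

def bag_of_words(text):
    """Count word frequencies, discarding word order and punctuation."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(tokens)

# The two example sentences from earlier differ in a single word count.
tech = bag_of_words(
    "As a leading firm in the [MASK] sector, we hire highly skilled software engineers."
)
energy = bag_of_words(
    "As a leading firm in the [MASK] sector, we hire highly skilled petroleum engineers."
)
only_in_tech = tech - energy  # Counter subtraction keeps positive counts only

# Two sentences with opposite meanings but identical word counts.
first = bag_of_words("Prices rise because wages rise.")
second = bag_of_words("Wages rise because prices rise.")
same_representation = first == second
```

The representation is easy to inspect, since every feature is a visible word count. The last pair shows what is lost: because order is ignored, the two causal statements are indistinguishable.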

On the other hand, developing and using LLMs generally requires significant computational resources and often a large, specialised research team. While they provide superior predictive capabilities and can capture complex patterns in data, these advanced models are less transparent. The vast majority of economists do not have the necessary resources or team to develop or manage these advanced models. Furthermore, the ‘black box’ nature of these models can make it challenging to understand exactly why a specific prediction was made, reducing the transparency of the research process.

Thus, the choice between a simpler model and a complex LLM may depend on various factors, including the availability of resources, the research question at hand, and the importance placed on transparency versus predictive power. It is a decision that requires careful consideration of the specific goals and constraints of each research project.

4. How should we evaluate the output of LLMs?

Traditional approaches to evaluating LLMs focus on metrics like perplexity, which evaluates how well a model reproduces sequences in language. Other common methods involve assessing performance on standard natural language processing (NLP) tasks such as solving language puzzles or math problems. However, for economists, these traditional measures may not fully capture the utility of an LLM.
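For readers unfamiliar with perplexity, the following sketch computes it as the exponential of the average negative log probability that a model assigns to each word that actually occurs. The per-word probabilities are invented for illustration and do not come from any real model.

```python
import math

def perplexity(token_probs):
    """Exponential of the average negative log probability of the observed words."""
    if not token_probs:
        raise ValueError("need at least one probability")
    if any(p <= 0.0 or p > 1.0 for p in token_probs):
        raise ValueError("probabilities must lie in (0, 1]")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical probabilities assigned to each observed word in a sentence.
confident_model = [0.9, 0.8, 0.95, 0.85]
uncertain_model = [0.3, 0.2, 0.25, 0.1]

# A model that spreads probability evenly over four words at every step.
uniform_over_four = perplexity([0.25, 0.25, 0.25, 0.25])
```

Lower values are better. The uniform case gives a perplexity of approximately 4, which matches the usual reading of perplexity as the effective number of equally likely words the model is choosing among at each step.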

As such, it may be beneficial to develop economics-specific tasks to evaluate these advanced language models. For instance, we could gauge the ability of an LLM to accurately interpret and summarise economic literature such as complex working papers or reports. Another test could involve predicting the impact of specific policy measures based on a given economic context. This would assess the model’s capacity to integrate diverse information and make economically sound predictions.

These are just examples, and the appropriate tests would ultimately depend on the specific use case. The key point is that the evaluation should be tailored to the context in which the LLM is intended to be used, to ensure it meets the needs and expectations of economists.

References

Ash, E, and S Hansen (2023), “Text algorithms in economics”, Annual Review of Economics, forthcoming.

Bajari, P, Z Cen, V Chernozhukov, M Manukonda, J Wang, R Huerta, J Li, L Leng, G Monokroussos, S Vijaykumar, and S Wan (2021), “Hedonic prices and quality adjusted price indices powered by AI”, Cemmap Working Paper CWP04/21.

Bana, S H (2022), “Work2vec: Using language models to understand wage premia”.

Hansen, S, P Lambert, N Bloom, S Davis, R Sadun and B Taska (2023), “Remote work across jobs, companies, and space”, CEPR Discussion Paper 17964.

Ilzetzki, E, and S Jain (2023), “The impact of artificial intelligence on growth and employment”, VoxEU.org, 20 June.