One of the most frequently voiced charges against social media platforms is that they have amplified existing societal tensions (e.g. Sunstein 2018). Encountering hateful content on social media is common, and there are concerns that such content can contribute to hateful actions offline. Indeed, recent empirical evidence suggests that negative sentiments on social networks can at times fuel violent attacks against ethnic and religious minorities (Müller and Schwarz 2019, 2021; Bursztyn et al. 2019). This evidence is consistent with a growing number of studies showing that social media can affect real-life outcomes such as elections (Fujiwara et al. 2020), protests (Enikolopov et al. 2019), and mental health (Braghieri et al. 2022).

Social media companies have not sat idle in addressing these problems. While posting hate speech is officially prohibited on all major platforms, how content moderation policies should be implemented remains highly controversial: some people object that platforms are not moderating enough, while others are concerned about online censorship. To decide whether such policies are socially desirable, however, it is crucial to first understand whether they can effectively reduce online hate and its violent offline consequences. Indeed, given existing evidence that content moderation plays only a small role in social media consumer surplus (Jiménez-Durán 2022), its real-world effects may be a major component of any assessment of its costs and benefits. In recent research (Jiménez-Durán et al. 2022), we provide some first evidence on this question, using a major 2017 legal change in Germany as a quasi-experiment.

A law to tame online hatred?

In 2017, the German parliament passed a sweeping new law aimed at reducing the toxicity of content on social network platforms. The Netzwerkdurchsetzungsgesetz (‘Act to Improve Enforcement of the Law in Social Networks’, or NetzDG) had the stated aim of “fight[ing] hate crime, criminally punishable fake news and other unlawful content on social networks more effectively”. Rather than creating new rules, it made explicit that the legal provisions already governing certain types of insults, hate speech, and public incitement to commit crimes in the offline world should also be enforced online by social media companies such as Facebook and Twitter.

Apart from requiring platforms to publish regular transparency reports, the law also enables the German government to fine social media networks up to €50 million if they fail to remove violating content. As such, the NetzDG can be interpreted as a major shock to social media platforms’ incentives to monitor and delete hateful content. Called “a key test for combatting online hate”, the NetzDG provides an almost ideal testing ground for understanding the effect of an increased takedown of hateful content on social networks. It is this quasi-experimental setting that we exploit to test the effect of online content moderation on online and offline hate.

Can moderation make online discourse less toxic?

The NetzDG was partially motivated by the spike in anti-refugee incidents that followed the 2015–2016 European refugee crisis, during which refugees faced systematic hate online. To measure which individuals were more strongly exposed to anti-refugee content online, we focus on users following the right-wing political party Alternative für Deutschland (AfD). In the third quarter of 2017, when the NetzDG came into effect, the AfD was the third-largest party in the German parliament, having risen on a platform of far-right anti-immigrant rhetoric, with a particular focus on refugees. Importantly, the AfD also had (and still has) far more Facebook followers than any other German party, making it a key voice in propagating anti-refugee content on social media.

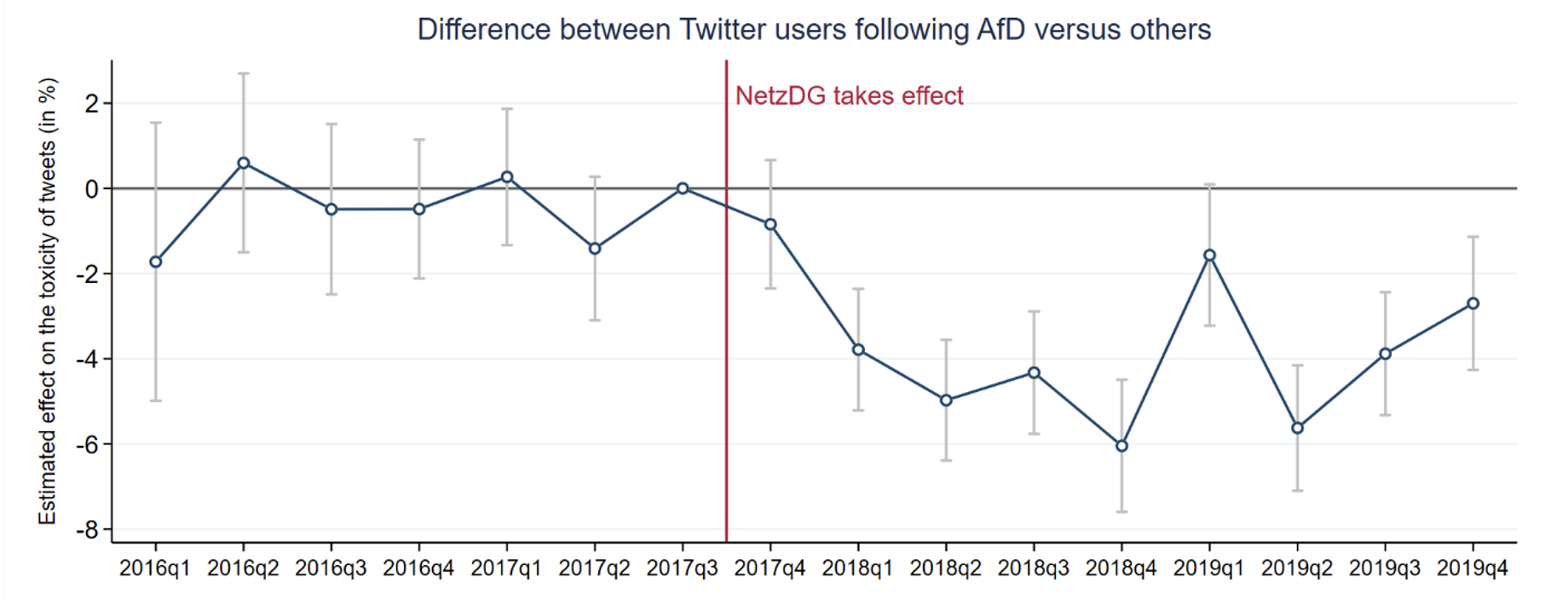

To estimate the effect of the NetzDG on the prevalence of online hate speech, we compare the toxicity of tweets about refugees sent by followers of the AfD with that of tweets sent by other Twitter users. Figure 1 shows the toxicity of AfD followers’ refugee tweets relative to those sent by other Twitter users around the time the NetzDG was enacted. We find a clear reduction in the toxicity of tweets by AfD followers as soon as the NetzDG came into force, with no clear pre-existing trends.

Figure 1 The effect of the NetzDG on the toxicity of tweets about refugees

These estimates imply that the law reduced this relative toxicity by around 8%. This finding is consistent with recent suggestive evidence that the toxicity of far-right German Twitter users decreased after the NetzDG relative to a set of Austrian Twitter users (Andres and Slivko 2021).
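To make the logic of this comparison concrete, here is a minimal two-group, two-period difference-in-differences sketch on hypothetical toxicity scores (the numbers are invented for illustration and are not data from the study). It contrasts the change in mean toxicity among AfD followers with the change among other users, and then expresses that gap relative to AfD followers’ pre-period mean, which is one common way of reporting a percentage effect. The estimates in the paper come from a richer regression and event-study design (Figure 1), so the function names and the normalisation used here are illustrative assumptions.

```python
from statistics import mean

# Hypothetical tweet-level toxicity scores on a 0-1 scale (NOT data from the study).
# Keys are (group, period): "afd" = AfD followers, "other" = all other users.
toxicity = {
    ("afd", "pre"): [0.40, 0.44, 0.42],
    ("afd", "post"): [0.36, 0.38, 0.37],
    ("other", "pre"): [0.30, 0.32, 0.31],
    ("other", "post"): [0.30, 0.31, 0.32],
}


def did(data):
    """2x2 difference-in-differences on group means:
    (AfD post - AfD pre) - (other post - other pre)."""
    m = {key: mean(scores) for key, scores in data.items()}
    treated_change = m[("afd", "post")] - m[("afd", "pre")]
    control_change = m[("other", "post")] - m[("other", "pre")]
    return treated_change - control_change


def relative_effect(data):
    """DiD estimate divided by AfD followers' pre-period mean toxicity
    (an assumed normalisation for reporting a percentage change)."""
    return did(data) / mean(data[("afd", "pre")])


print(round(did(toxicity), 3))  # -0.05
print(f"{relative_effect(toxicity):.1%}")
```

Netting out the change among other users is what guards against attributing a platform-wide drift in toxicity to the NetzDG; the absence of pre-existing trends in Figure 1 is what makes that comparison credible.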

The offline consequences of curbing hate speech

The previous result suggests that the NetzDG likely made online discourse about refugees more civil. An even more important question is whether the reduced online toxicity also had real-life consequences. For our main analysis, we thus investigate the effect of the NetzDG on hate crimes against refugees, exploiting municipality-level differences in the exposure to far-right social media content. If the NetzDG reduced online hate speech, one would expect a larger decrease in the number of anti-refugee incidents in areas where more people were exposed to hateful content in the first place.

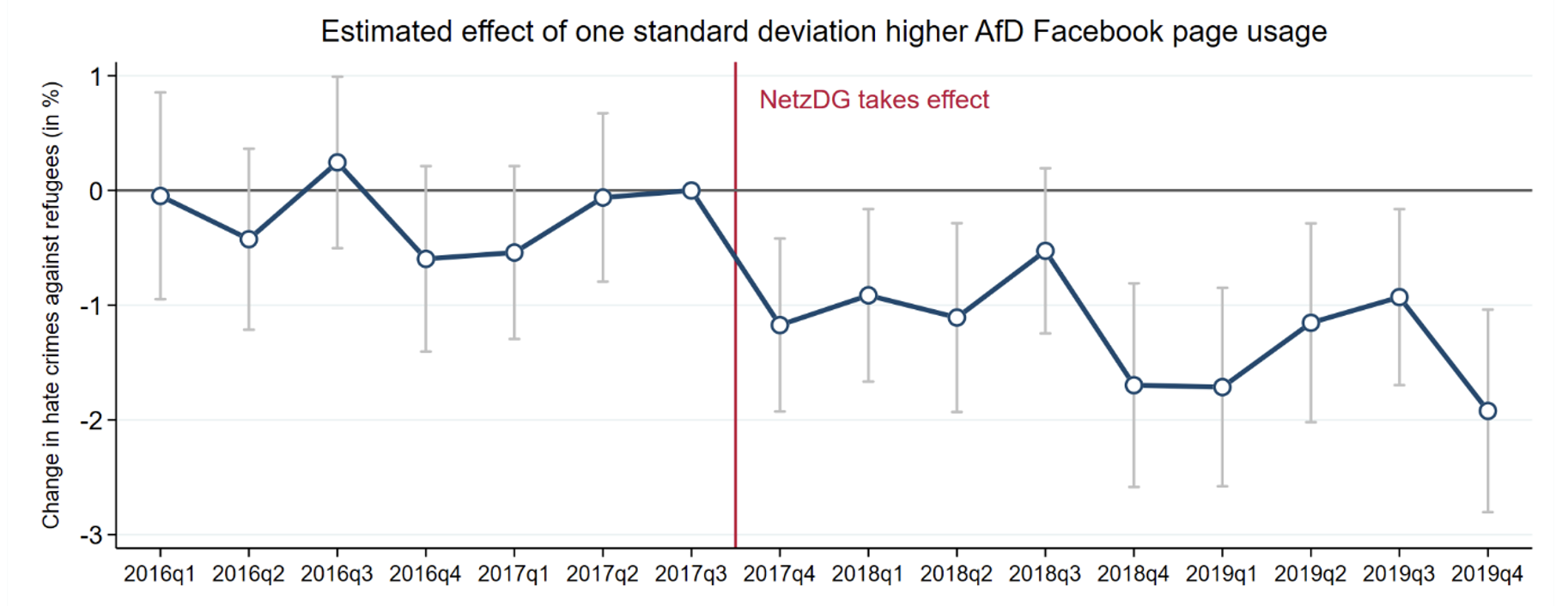

We test this conjecture using data on the popularity of the AfD Facebook page across towns. Consistent with a causal effect of the NetzDG, we find a disproportionate drop in anti-refugee hate crimes in towns where the page was more popular: a one-standard-deviation-higher number of AfD Facebook followers per capita is associated with an approximate 1% reduction in the number of anti-refugee incidents. This effect is visualised in Figure 2, which plots changes in hate crime depending on a town’s AfD Facebook followers around the introduction of the NetzDG.

Figure 2 The effect of the NetzDG on anti-refugee hate crimes
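The exposure-based design can likewise be sketched in a deliberately simplified, two-period form: standardise each town’s AfD Facebook followers per capita, compute the pre/post change in log(1 + incidents), and take the OLS slope of that change on the standardised exposure. The slope then approximates the proportional change in incidents associated with a one-standard-deviation-higher exposure. The towns and counts below are hypothetical, and this is not the specification used in the paper, which is richer than this two-period version.

```python
import math
from statistics import mean, pstdev

# Hypothetical towns (NOT data from the study):
# (AfD Facebook followers per 1,000 residents, incidents before, incidents after)
towns = [
    (2.0, 10, 10),
    (4.0, 12, 11),
    (6.0, 15, 13),
    (8.0, 20, 17),
]


def exposure_slope(rows):
    """OLS slope of the pre/post change in log(1 + incidents) on
    standardised exposure, i.e. the approximate proportional change
    associated with a one-SD-higher AfD following."""
    x = [followers for followers, _, _ in rows]
    mu, sd = mean(x), pstdev(x)
    z = [(xi - mu) / sd for xi in x]  # standardised exposure
    dy = [math.log1p(post) - math.log1p(pre) for _, pre, post in rows]
    z_bar, dy_bar = mean(z), mean(dy)
    cov = sum((zi - z_bar) * (di - dy_bar) for zi, di in zip(z, dy))
    var = sum((zi - z_bar) ** 2 for zi in z)
    return cov / var


print(exposure_slope(towns) < 0)  # True: more exposed towns saw larger drops
```

Running the same regression with a town’s total Facebook users or the AfD’s vote share in place of AfD followers is the kind of placebo comparison described next.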

Importantly, however, we find no systematic changes in hate crimes depending on a town’s total number of Facebook users or the AfD’s vote share. This finding suggests that right-wing social media usage, specifically, is what matters for the reduction in offline hate following more stringent online content moderation.

Quo vadis, content moderation?

Twitter’s recent takeover by Elon Musk has reignited interest in how social media platforms should moderate content. A focal point is whether there is too much or too little ‘free speech’ or ‘hate speech’ on these platforms. Germany’s experience with the NetzDG, which increased government-mandated but privately implemented censorship of hateful content, might be instructive. Clearly, making a greater effort to delete toxic content imposes costs on platform providers and the government, and there are legitimate concerns about partisan implementation and infringement on freedom of expression (Wall Street Journal 2020). That said, our findings suggest that online content moderation indeed ‘works’ in reducing online hate and its offline consequences.

References

Andres, R, and O Slivko (2021), “Combating online hate speech: The impact of legislation on Twitter”, ZEW-Centre for European Economic Research Discussion Paper 21-103.

Braghieri, L, R Levy, and A Makarin (2022), “Social media and mental health”, VoxEU.org, 22 July.

Bursztyn, L, G Egorov, R Enikolopov, and M Petrova (2019), “Social media and xenophobia: Evidence from Russia”, NBER Working Paper w26567.

Enikolopov, R, A Makarin, and M Petrova (2019), “Social media and protest participation: Evidence from Russia”, VoxEU.org, 17 December.

Fujiwara, T, K Müller, and C Schwarz (2020), “How Twitter affected the 2016 presidential election”, VoxEU.org, 30 October.

Jiménez-Durán, R (2022), “The economics of content moderation: Theory and experimental evidence from hate speech on Twitter”, available at SSRN.

Jiménez-Durán, R, K Müller, and C Schwarz (2022), “The effect of content moderation on online and offline hate: Evidence from Germany’s NetzDG”, CEPR Discussion Paper DP17554.

Müller, K, and C Schwarz (2019), “From hashtag to hate crime: Twitter and anti-minority sentiment”, working paper.

Müller, K, and C Schwarz (2021), “Fanning the flames of hate: Social media and hate crime”, Journal of the European Economic Association 19(4): 2131–67.

Sunstein, C R (2018), #Republic: Divided democracy in the age of social media, Princeton University Press.

Wall Street Journal (2020), “Twitter’s partisan censors”, 15 October.