Social media applications make it easy to show support for moral causes: with a few taps of a phone screen, we can retweet a video of police brutality, post a black square to Instagram with the hashtag #blackouttuesday, and celebrate women by promoting International Women’s Day. Some would say that social media applications make it too easy to show support for moral causes, enabling us to declare support without any personal sacrifice. Hypocrisy could then be widespread. Do people back up their words with actions? What do virtue signals actually signal?

In a recent paper (Angeli et al. 2023), we answer these questions in the context of nearly 20,000 Twitter-using academics based in the US. We scraped all of their recent tweets, classifying 63% of academics as ‘vocal’ (those who tweeted about racial justice), and the remaining 37% as ‘silent’. Importantly, almost all the racial justice tweets we identify showed support for racial justice efforts, allowing us to treat the vocal academics as having declared support for racial justice on Twitter. We linked each academic with two measures of offline behaviour: discriminatory conduct as measured in an audit study, and political contributions.

We included 11,450 non-Black academics in the audit study. We sent each academic an email, purporting to be a student requesting a Zoom meeting to discuss graduate studies. We chose student names to be distinctively Black- versus white-sounding, and distinctively female- versus male-sounding. We randomised which student name was used for each academic; in a random half of the emails, we also included a sentence self-identifying the sender as a first-generation college student. The study allows us to measure discrimination in meeting acceptance along three dimensions: race, gender, and first-generation status.
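The random assignment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `assign_treatments`, the fixed seed, and the use of independent coin flips for each dimension are hypothetical, and the actual study may have balanced or stratified the assignment differently.

```python
import random
from collections import Counter

RACES = ("Black", "white")
GENDERS = ("female", "male")


def assign_treatments(academic_ids, seed=0):
    """Draw race, gender and first-generation signal independently per academic.

    Assumption: simple independent randomisation, not the authors' exact procedure.
    """
    rng = random.Random(seed)
    assignments = {}
    for academic_id in academic_ids:
        assignments[academic_id] = {
            "race": rng.choice(RACES),
            "gender": rng.choice(GENDERS),
            # Roughly half of the emails carry the first-generation sentence.
            "first_gen": rng.random() < 0.5,
        }
    return assignments


# 11,450 is the audit sample size reported in the text.
assignments = assign_treatments(range(11450))
cell_counts = Counter(
    (a["race"], a["gender"], a["first_gen"]) for a in assignments.values()
)
```

Because each dimension is drawn independently of the academic's characteristics, the eight race-by-gender-by-first-generation cells end up with roughly equal numbers of academics, which is what lets differences in acceptance rates be read as discrimination.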

Audit studies, which require deception (a clear moral cost), have rarely been run on US-based professors. A recent meta-analysis of audit studies measuring racial discrimination in the US (Gaddis et al. 2021) found only one study involving professors, carried out over ten years ago (Milkman et al. 2012). Our audit study thus provides a useful update to our understanding of discrimination in academia, as well as the first analysis of how social media activity predicts discriminatory behaviour offline.

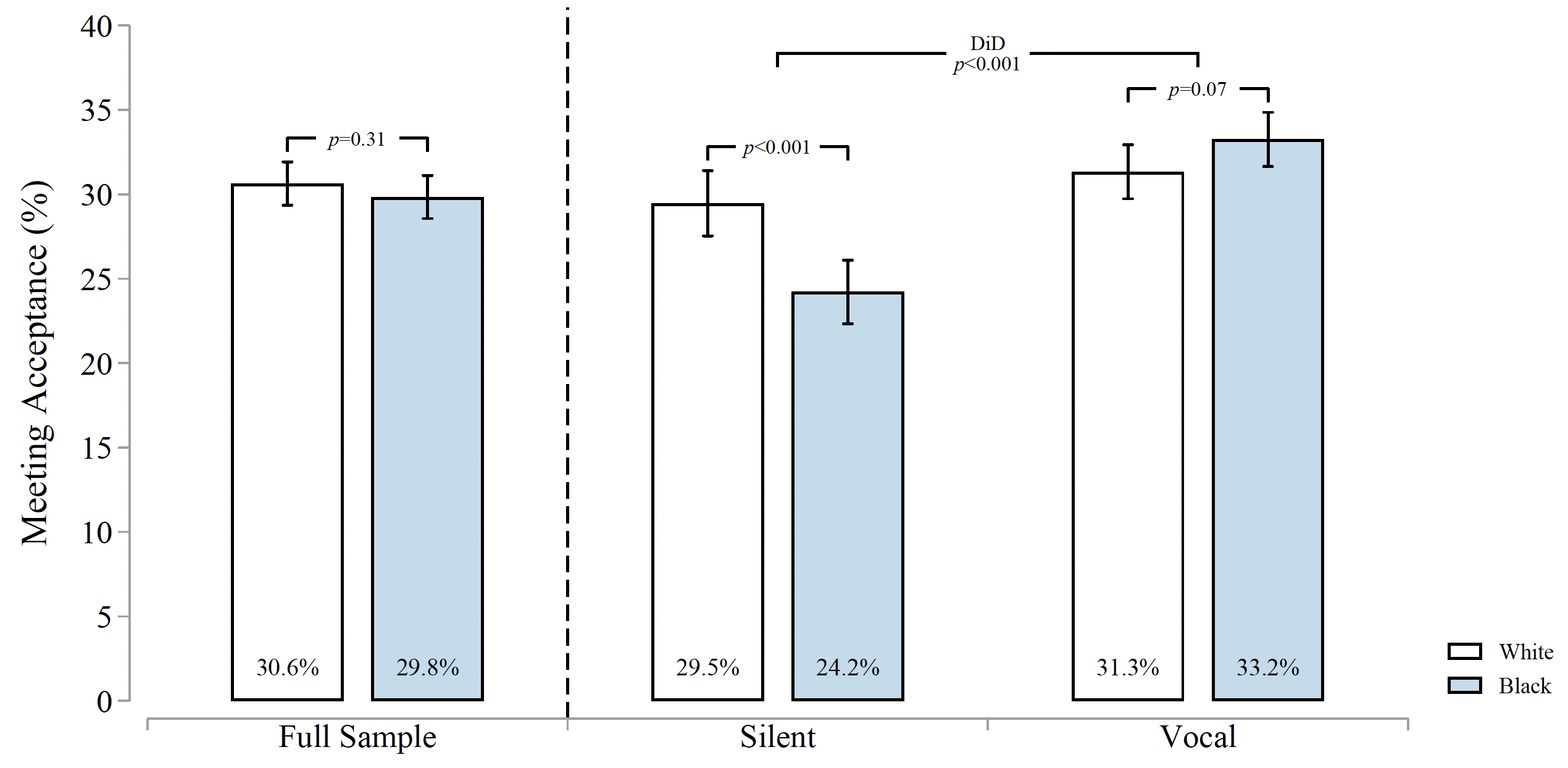

In stark contrast to Milkman et al. (2012), we find no evidence of racial discrimination in meeting acceptance overall. Academics accept 30.6% of meetings from distinctively white names, and 29.8% from distinctively Black names. The difference is not statistically significant (Figure 1).

The overall null effect masks differences between silent and vocal academics. Silent academics are 18% less likely to accept a meeting with a Black student than with a white student, while vocal academics are 6% more likely to accept a meeting with a Black student than with a white student. Virtue signals are not vacuous – academics back up their tweets with behaviour.
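The 18% and 6% figures read most naturally as relative differences in acceptance rates, not percentage-point gaps; that reading is an assumption here. The column does not report the acceptance rates for silent and vocal academics separately, so the sketch below applies the same arithmetic only to the overall rates quoted earlier (30.6% and 29.8%). The function name `relative_gap` is hypothetical.

```python
def relative_gap(rate_group, rate_reference):
    """Relative difference in acceptance rates, as a fraction of the reference rate."""
    return (rate_group - rate_reference) / rate_reference


# Overall acceptance rates from the text: Black-sounding vs white-sounding names.
overall = relative_gap(29.8, 30.6)
print(f"{overall:.1%}")  # -2.6%
```

On this reading, the overall gap of 0.8 percentage points corresponds to Black-sounding names being accepted about 2.6% less often, a difference the text reports as not statistically significant.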

Figure 1 Silent academics discriminate against Black students, vocal academics discriminate (somewhat) against white students

Notes: Bars show what percentage of audited academics accepted meeting requests from distinctively white and distinctively Black names. The full sample includes the 11,450 audited academics (4,318 silent and 7,132 vocal). Vocal academics are those who tweeted at least once about racial justice from January 2020 to March 2022. Silent academics are those who did not tweet about racial justice.

Tweets also signal support for students from other underrepresented groups. Audited academics discriminate 11% in favour of women and 15% in favour of first-generation students. In both cases, vocal academics discriminate more in favour of the minority group than silent academics. Racial justice tweets are therefore not signals of unbiasedness: on gender and first-generation status, vocal academics are actually more biased than silent academics, but this time in favour of minority groups.

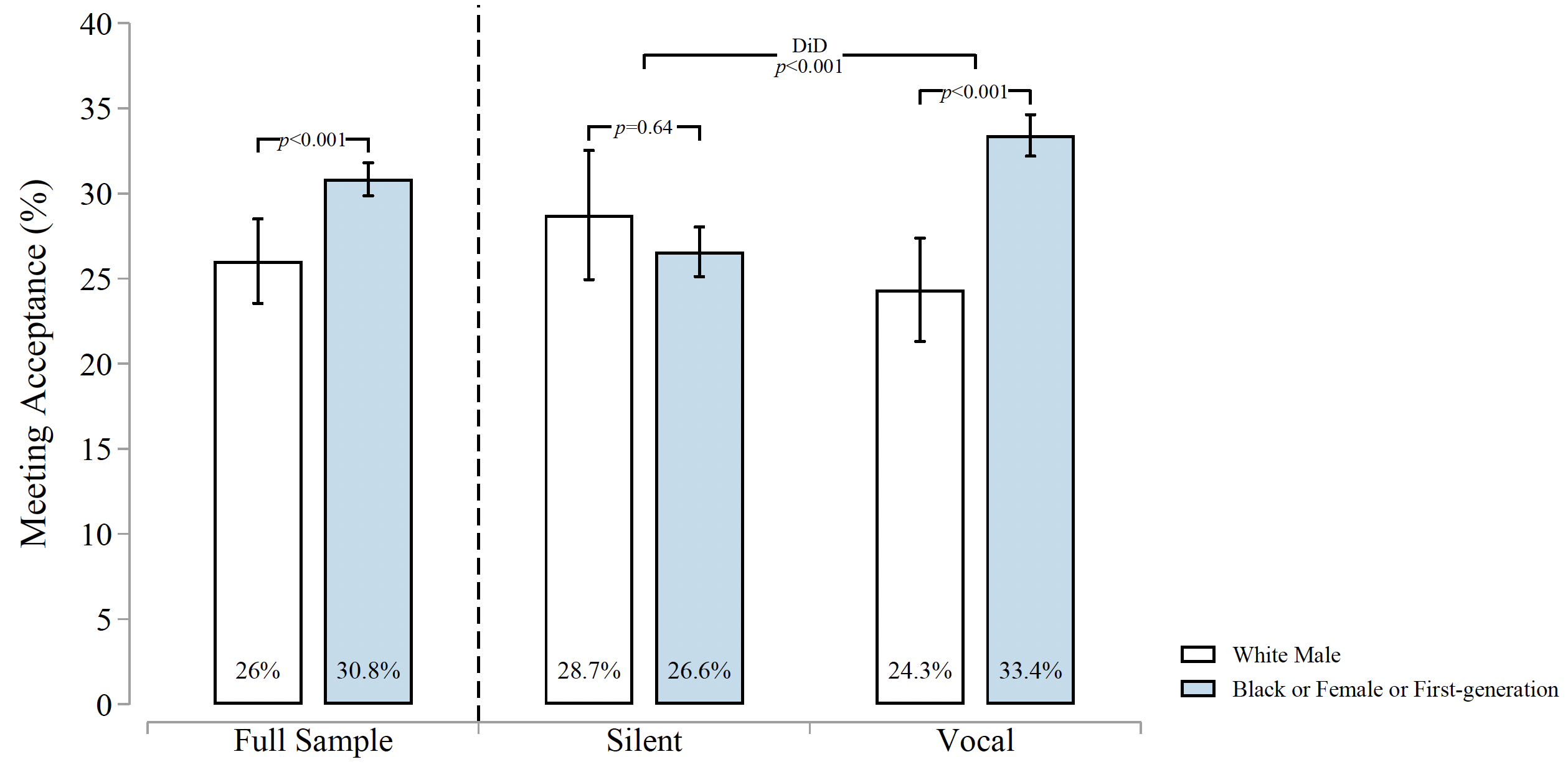

To show more clearly the treatment of minority students relative to non-minority students, we can group our emails into those from a student with any minority status (Black or female or first-generation), and compare them with emails from non-minority students (white and male). Silent academics treat minority and non-minority students similarly, whereas vocal academics favour minority students by 37% (Figure 2).

Figure 2 Vocal academics favour minority students

Notes: Bars show what percentage of audited academics accepted meeting requests from distinctively white male names with no mention of first-generation status (1/8 of emails) versus emails from distinctively Black or female names, or emails that mention first-generation status (7/8 of emails).
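The 1/8 versus 7/8 split in the notes follows from the design having three two-way dimensions. A short enumeration makes this concrete; the helper `is_minority` is a hypothetical name, and equal cell sizes are assumed.

```python
from itertools import product

# All combinations of sender race, sender gender, and first-generation mention.
cells = list(product(("Black", "white"), ("female", "male"), (True, False)))


def is_minority(race, gender, first_gen):
    """Any minority status: Black name, female name, or first-generation mention."""
    return race == "Black" or gender == "female" or first_gen


non_minority = [cell for cell in cells if not is_minority(*cell)]
minority_share = 1 - len(non_minority) / len(cells)
```

Only one of the eight cells (white, male, no first-generation mention) counts as non-minority, so with equal-sized cells the comparison group is about 1/8 of emails and the minority group about 7/8.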

These results are similar when we restrict to comparisons between similar academics. After controlling for other variables – including Twitter activity, gender, ethnicity, and field – racial justice-tweeting academics still discriminate more in favour of minority students than other academics (see Figure 6 in Angeli et al. 2023).

While audit studies deliver gold-standard measures of discrimination in principle, an important concern when running an audit study with academics is detection. If academics realise they are in an audit study, and act differently as a result, our measures of discrimination might be misleading. Fortunately, our results are very similar after dropping the academics familiar with the audit methodology (i.e. social scientists; for this and other exercises, see Figure 7 in Angeli et al. 2023).

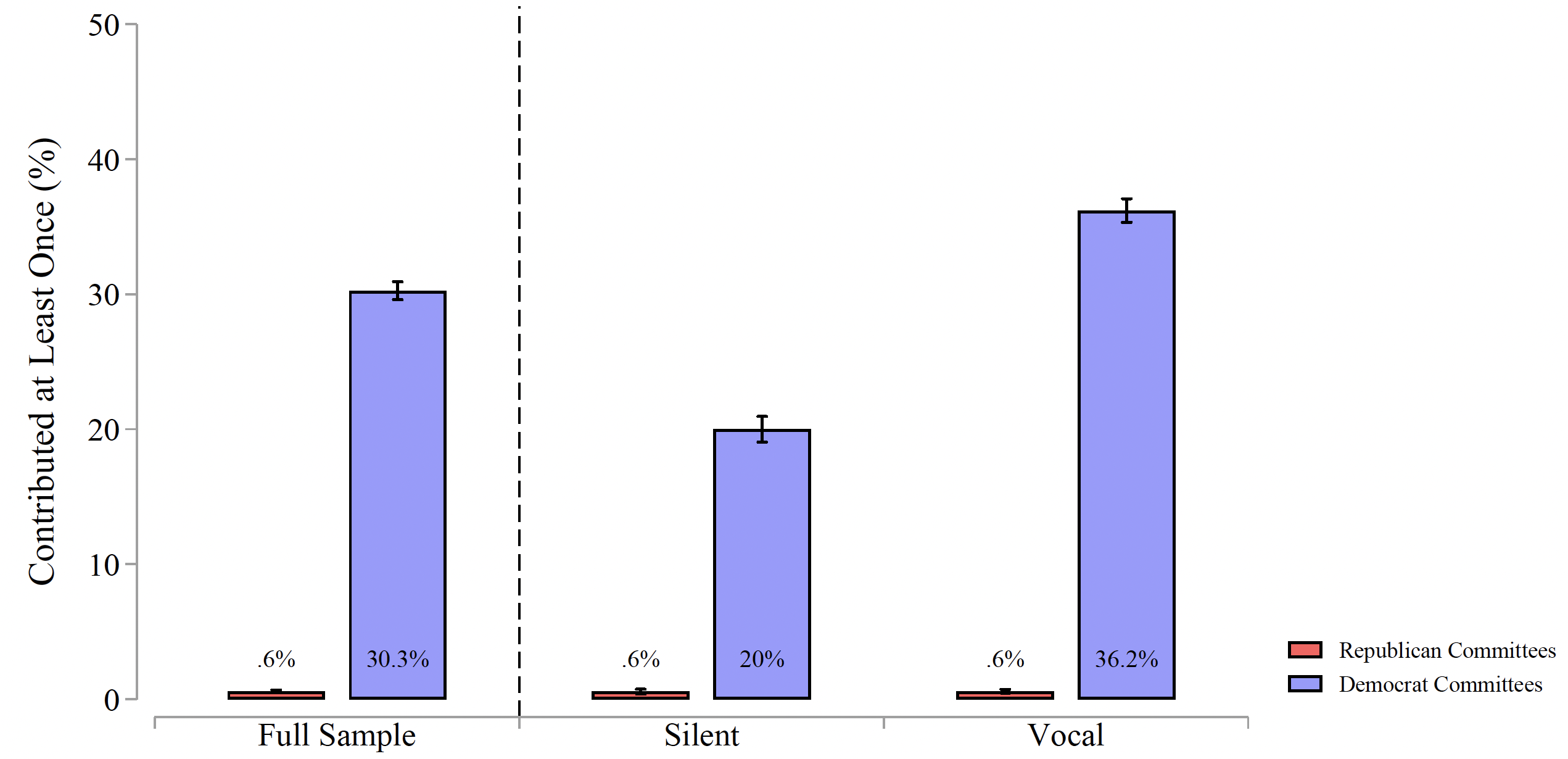

Turning to political behaviour, vocal academics are almost twice as likely as silent academics to have contributed to Democrats, while vanishingly few academics contribute to Republicans (Figure 3). To explore racial bias in giving, we turn to a case study: the 2021 Georgia Senate runoffs, with Jon Ossoff (a white Democratic candidate) and Raphael Warnock (a Black Democratic candidate). While the two candidates differ in race, they share a party and state, ran and won close elections, and voted similarly once in office. In contrast to the audit results, we find that both vocal and silent academics are slightly less likely to give money to Warnock than to Ossoff. It follows that virtue signals align with discriminatory behaviours in the workplace, but not racial bias in political giving.

Figure 3 Tweeting academics almost never contribute to Republican candidates, while vocal academics are more active

Notes: Bars show what percentage of academics made FEC-reported political contributions to Republican and Democratic committees from January 2020 to March 2022. The full sample includes 18,513 academics, a larger sample than that included in the audit study. Silent includes the 6,783 academics who did not tweet about racial justice during the same time period; vocal includes the 11,730 academics who tweeted about racial justice.

Our audit results tell us that social media is informative: we learn a lot about an academic’s real-world behaviours when we see them tweet in support of racial justice. But given the frequent accusations of hypocrisy in the virtual world, we might ask: do social media users recognise the information value of racial justice tweets? To answer this question, we surveyed 1,752 US-based graduate students, asking them to predict the difference in audit-measured behaviour between vocal and silent academics. Students were pessimistic, predicting that both vocal and silent academics would discriminate against Black students. We see a ‘wisdom of crowds’ effect for the difference in behaviour between vocal and silent academics: on average, student predictions were close to correct. Despite this, most students were individually wrong, with 71% predicting a difference in discrimination outside of our 95% confidence interval. The most common mistake was to underestimate the difference in discriminatory behaviour between vocal and silent academics – these students too often believe that virtue signals are vacuous.
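It can seem odd that the average prediction is close to correct while most individual predictions are not. The toy example below shows how both can hold at once. Every number in it is made up for illustration: the predictions and the confidence interval are hypothetical and are not taken from the survey or the audit.

```python
from statistics import mean

# Hypothetical predicted gaps between vocal and silent academics
# (arbitrary units); NOT the survey data.
predictions = [-40, -20, 0, 5, 20, 30, 50, 60, 70, 75]

# Hypothetical 95% confidence interval for the audit-measured gap.
ci_low, ci_high = 10, 40

crowd_average = mean(predictions)
average_inside_ci = ci_low <= crowd_average <= ci_high

# Share of individual predictions that fall outside the interval.
share_outside = sum(p < ci_low or p > ci_high for p in predictions) / len(predictions)
```

In this toy case the average prediction (25) sits inside the interval even though 8 of the 10 individual predictions fall outside it, because errors in opposite directions cancel when averaged.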

Tweets predict actions, at least for academics. How general is this finding? Speculating, we might expect the tweets of corporations to be less predictive. Somewhat consistent with this, Edmans et al. (2023) find that visible metrics of diversity, equity, and inclusion policies are at best weakly correlated with metrics based on confidential employee responses. In ongoing work, we also find that UK firms tweeting about International Women’s Day have similar gender pay gaps to those not tweeting. Future work might clarify exactly when and where virtue signals predict costly actions.

Authors' note: The Village Team is a team of over 100 research assistants led by Carla Colina, Jordan Hutchings, Noor Kumar, Ines Moran, Saloni Sharma, Aurellia Sunarja, Chihiro Tanigawa, Akash Uppal, and Kevin Yu (see Appendix A in Angeli et al. (2023) for a full list).

References

Angeli, D, M Lowe and the Village Team (2023), “Virtue Signals”, working paper.

Edmans, A, C Flammer and S Glossner (2023), “The Value of Diversity, Equity, and Inclusion”, VoxEU.org, 11 June.

Gaddis, S M, E Larsen, C Crabtree and J Holbein (2021), “Discrimination against black and Hispanic Americans is highest in hiring and housing contexts: A meta-analysis of correspondence audits”, SSRN 3975770.

Milkman, K L, M Akinola and D Chugh (2012), “Temporal distance and discrimination: An audit study in academia”, Psychological Science 23 (7): 710–717.