Artificial intelligence (AI) will likely be of considerable help to the financial authorities if they proactively engage with it. But if they are conservative, reluctant, and slow, they risk both irrelevance and financial instability.

The private sector is rapidly adopting AI, even if many financial institutions signal that they intend to proceed cautiously. Many financial institutions have large AI teams and invest significantly; JP Morgan reports spending over $1 billion per year on AI, and Thomson Reuters has an $8 billion AI war chest. AI helps them make investments and perform back-office tasks like risk management, compliance, fraud detection, anti-money laundering, and ‘know your customer’. It promises considerable cost savings and efficiency improvements, and in a highly competitive financial system, it seems inevitable that AI adoption will grow rapidly.

As the private sector adopts AI, AI speeds up its reactions and helps it find loopholes in the regulations. As we noted in Danielsson and Uthemann (2024a), the authorities will have to keep up if they wish to remain relevant.

So far, the authorities have been slow to engage with AI, and they will find adopting it challenging. Adoption requires cultural and staffing changes and new approaches to supervision, and very significant resources will have to be allocated.

Pros and cons of AI

We see AI as a computer algorithm that performs tasks usually done by humans, such as giving recommendations and making decisions. This distinguishes it from machine learning and traditional statistics, which only provide quantitative analysis. For economic and financial applications, it is particularly helpful to consider AI as a rational maximising agent, one of the definitions of AI in Russell and Norvig (2021).
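To make the "rational maximising agent" view concrete, the following Python sketch shows an agent that picks whichever action maximises expected utility given its beliefs about the state of the world. The states, probabilities, portfolio names, and payoffs are hypothetical illustrations, not calibrated to any market.

```python
from typing import Dict

# Hypothetical types: beliefs map states to probabilities; payoffs map
# each action to its payoff in each state.
Beliefs = Dict[str, float]
Payoffs = Dict[str, Dict[str, float]]


def expected_utility(action: str, beliefs: Beliefs, payoffs: Payoffs) -> float:
    """Probability-weighted payoff of `action` across all states of the world."""
    return sum(prob * payoffs[action][state] for state, prob in beliefs.items())


def choose_action(beliefs: Beliefs, payoffs: Payoffs) -> str:
    """A rational maximising agent picks the action with the highest expected utility."""
    return max(payoffs, key=lambda action: expected_utility(action, beliefs, payoffs))


# Hypothetical payoffs for an investor choosing between two portfolios.
payoffs = {
    "hold_risky": {"calm": 5.0, "stress": -20.0},
    "hold_safe": {"calm": 1.0, "stress": 1.0},
}

print(choose_action({"calm": 0.8, "stress": 0.2}, payoffs))  # hold_safe
print(choose_action({"calm": 0.9, "stress": 0.1}, payoffs))  # hold_risky
```

Raising the agent's belief in the stress state from 10% to 20% is enough to flip its choice, which illustrates how mechanically and quickly such an agent responds to new information.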

AI has particular strengths and weaknesses. It is very good at finding patterns in data and reacting quickly, cheaply, and usually reliably.

However, this depends on AI having access to relevant data. The financial system generates an enormous amount of data, petabytes daily, but that is not sufficient. A financial sector AI working for the authorities should also draw on knowledge from other domains, such as history, ethics, law, politics, and psychology. To make connections between these domains, it will have to be trained on data that contain such connections. Even if that is possible, we do not know how an AI fed with knowledge from a wide set of domains and given high-level objectives will perform. When made to extrapolate, its advice might be judged by human experts to be entirely wrong or even dangerous.

Ultimately, this means that when AI extrapolates beyond existing knowledge, humans should check the quality of its advice.

How the authorities can implement AI

The financial authorities hold a lot of public and private information that can be used to train AI, as discussed in Danielsson and Uthemann (2024b), including:

1. Observations on past compliance and supervisory decisions

2. Prices, trading volumes, and securities holdings in fixed-income, repo, derivatives, and equity markets

3. Assets and liabilities of commercial banks

4. Network connections, such as cross-institution exposures, including cross-border exposures

5. Textual data

   - The rulebook

   - Central bank speeches, policy decisions, and staff analysis

   - Records of past crisis resolution

6. Internal economic models

   - Interest rate term structure models

   - Market liquidity models

   - Inflation, GDP, and labour market forecasting models

   - Equilibrium macro models for policy analysis

Data alone are not sufficient; AI also requires considerable human resources and computing power. Bloomberg reports that the median salary for specialists in data, analytics, and artificial intelligence at US banks was $901,000 in 2022, and $676,000 in Europe, costs beyond the reach of the financial authorities and similar to what the highest-paid central bank governors earn. Technical staff earn much less (see, for example, Borgonovi et al. 2023 for a discussion of the AI skills market).

However, it is easy to overstate these problems. The largest expense is training AI on large publicly available text databases. The primary AI vendors already meet that cost, and the authorities can use transfer learning to augment the resulting general-purpose engines with specialised knowledge at a manageable cost.
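Transfer learning comes in several forms. The Python sketch below shows the simplest, feature extraction: a general-purpose model is kept frozen and only a small task-specific layer is trained on the authority's own data. Here `pretrained_features` is a hypothetical keyword-counting stand-in for a vendor's foundation model, and the supervisory notes and labels are invented for illustration.

```python
import math
from typing import List, Tuple

# Hypothetical keyword sets standing in for what a large pretrained model "knows".
RISK_WORDS = {"breach", "late", "missing"}
CLEAN_WORDS = {"filed", "complete", "compliant"}


def pretrained_features(text: str) -> List[float]:
    """Stand-in for a frozen, vendor-trained encoder: it is never updated below."""
    words = text.lower().split()
    return [
        1.0,  # bias term
        float(sum(w in RISK_WORDS for w in words)),
        float(sum(w in CLEAN_WORDS for w in words)),
    ]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data: List[Tuple[str, int]], epochs: int = 500, lr: float = 0.1) -> List[float]:
    """Fit a logistic-regression head on top of the frozen features."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in data:
            x = pretrained_features(text)
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
            # Stochastic gradient step on the log-loss; only the head is trained.
            weights = [w - lr * (p - label) * xi for w, xi in zip(weights, x)]
    return weights


def predict(weights: List[float], text: str) -> float:
    """Probability that a hypothetical note should be flagged for review."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, pretrained_features(text))))


# Hypothetical labelled supervisory notes: 1 = flag for review, 0 = no action.
notes = [
    ("report filed complete", 0),
    ("capital breach late report", 1),
    ("missing data late", 1),
    ("compliant and filed", 0),
]
head = train_head(notes)
print(predict(head, "late breach notice") > 0.5)  # True
print(predict(head, "filed complete") > 0.5)      # False
```

The design point is that the expensive component, the encoder, is reused unchanged and only the cheap head is fitted. Adapting a real foundation model would rely on dedicated tooling rather than hand-written gradient descent.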

Taking advantage of AI

There are many areas where AI could be very useful to financial authorities.

AI can help the micro authorities design rules and regulations and enforce compliance with them. While human supervisors would initially make enforcement decisions, reinforcement learning with human feedback will help the supervisory AI become increasingly performant and, hence, autonomous. Adversarial architectures, such as generative adversarial networks, might be particularly beneficial in understanding complex areas of interaction between the authorities and the private sector, such as fraud detection.
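Reinforcement learning with human feedback typically begins by fitting a reward model to human preference judgements, which is then used to train the AI's policy. The Python sketch below covers only that first step, using a Bradley-Terry model fitted by stochastic gradient ascent; the candidate supervisory responses and the recorded supervisor preferences are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def fit_reward_model(
    candidates: List[str],
    preferences: List[Tuple[str, str]],
    epochs: int = 2000,
    lr: float = 0.05,
) -> Dict[str, float]:
    """Bradley-Terry reward model: P(winner preferred to loser) = sigmoid(r_w - r_l).

    Each preference is a (preferred, rejected) pair recorded from a human
    supervisor. Scores are fitted by stochastic gradient ascent on the
    log-likelihood of the observed preferences.
    """
    reward = {c: 0.0 for c in candidates}
    for _ in range(epochs):
        for winner, loser in preferences:
            p = 1.0 / (1.0 + math.exp(-(reward[winner] - reward[loser])))
            grad = 1.0 - p  # d log(p) / d r_winner; the loser gets the negative
            reward[winner] += lr * grad
            reward[loser] -= lr * grad
    return reward


# Hypothetical supervisory responses and recorded human preferences.
responses = ["issue_warning", "impose_fine", "no_action"]
feedback = [
    ("issue_warning", "no_action"),
    ("issue_warning", "impose_fine"),
    ("impose_fine", "no_action"),
    ("issue_warning", "no_action"),
]
scores = fit_reward_model(responses, feedback)
print(sorted(scores, key=scores.get, reverse=True))
# ['issue_warning', 'impose_fine', 'no_action']
```

A full RLHF pipeline would then optimise the supervisory AI against these learned scores. As supervisors record more comparisons, the scores, and hence the AI's behaviour, track their judgement more closely.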

AI will also be helpful to the macro authorities, for example in advising on how best to cope with stress and crises. AI engines can run simulations of alternative responses to stress, advise on and implement interventions, and analyse the drivers of extreme stress. The authorities could use generative models as artificial labs to experiment with policies and evaluate private sector algorithms.
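Simulating alternative responses to stress can be illustrated with a toy Monte Carlo model. In the Python sketch below, banks face a common shock plus a bank-specific shock, and the policy lever is a uniform injection of capital or liquidity; the model, the buffers, and the shock sizes are all hypothetical and far simpler than anything an authority would use.

```python
import random
from typing import List


def average_failures(
    capital: List[float],
    injection: float,
    n_runs: int = 10_000,
    seed: int = 0,
) -> float:
    """Average number of bank failures per simulated stress episode.

    Toy model (all parameters hypothetical): each episode draws one
    system-wide shock plus a bank-specific shock; a bank fails when its loss
    exceeds its capital buffer plus any policy injection.
    """
    rng = random.Random(seed)  # same seed => same shocks for every policy
    total = 0
    for _ in range(n_runs):
        common = rng.gauss(0.0, 3.0)  # system-wide shock
        for buffer in capital:
            loss = max(0.0, common + rng.gauss(0.0, 1.0))
            if loss > buffer + injection:
                total += 1
    return total / n_runs


banks = [2.0, 4.0, 6.0]  # hypothetical capital buffers
for injection in (0.0, 1.0, 2.0):
    print(injection, average_failures(banks, injection))
```

Reusing the same random seed for every policy (common random numbers) means each policy faces identical shocks, so differences in failure counts reflect the policy rather than simulation noise.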

AI will also be useful in ordinary economic analysis and forecasting. This is achievable with general-purpose foundation models augmented via transfer learning using public and private data, established economic theory, and previous policy analysis. Reinforcement learning with feedback from human experts can further improve the engine. Such AI would be very beneficial for economic forecasting, policy analysis, and macroprudential stress testing, to mention a few applications.

Risks arising from AI

AI also brings new types of risk, particularly for macro (e.g. Acemoglu 2021). A key challenge in many applications is that AI must handle behaviour that we rarely, if ever, observe in available data, such as complicated interrelations between market participants in times of stress.

When AI does not have the necessary information in its training dataset, its advice will be constrained by what happened in the past and will not adequately reflect new circumstances. This is why it is very important that AI reports measures of statistical confidence for its advice.
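One generic way to attach statistical confidence to data-driven advice is the percentile bootstrap. The Python sketch below reports a point estimate together with a 90% interval; the loss data are hypothetical, and the bootstrap is one option among many, not necessarily what an authority would adopt.

```python
import random
from statistics import mean
from typing import Callable, Sequence, Tuple


def bootstrap_interval(
    data: Sequence[float],
    statistic: Callable[[Sequence[float]], float] = mean,
    n_boot: int = 2000,
    level: float = 0.9,
    seed: int = 0,
) -> Tuple[float, float, float]:
    """Point estimate plus a percentile-bootstrap confidence interval."""
    rng = random.Random(seed)
    point = statistic(data)
    # Resample the data with replacement and recompute the statistic each time.
    draws = sorted(statistic(rng.choices(data, k=len(data))) for _ in range(n_boot))
    lower = draws[round((1 - level) / 2 * n_boot)]
    upper = draws[round((1 + level) / 2 * n_boot) - 1]
    return point, lower, upper


# Hypothetical historical losses (per cent of assets) behind a piece of AI advice.
losses = [1.2, 0.8, 2.5, 0.3, 1.9, 3.1, 0.7, 1.4]
estimate, low, high = bootstrap_interval(losses)
print(f"expected loss {estimate:.2f}, 90% interval [{low:.2f}, {high:.2f}]")
```

The limitation discussed above still applies: the bootstrap only measures sampling uncertainty within the historical data. It cannot signal that a new crisis lies outside that history, so a narrow interval is no guarantee that the advice applies.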

Faced with all these risks, the authorities might conclude that AI should only be used for low-level advice, not decisions, and take care to keep humans in the loop to avoid undesirable outcomes. However, this distinction may matter less than it appears. Humans might not understand AI's internal representation of the financial system. The engine might also act to eliminate the risk of human operators making inferior choices, in effect becoming a shadow decision-maker.
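Keeping humans in the loop is often implemented as a routing rule. A minimal Python sketch follows, assuming a hypothetical policy in which any potentially catastrophic action, or any advice below a confidence threshold, requires human sign-off; the threshold, action names, and consequence labels are illustrative.

```python
from dataclasses import dataclass
from enum import Enum


class Consequence(Enum):
    SMALL = "small"
    MANAGEABLE = "large but manageable"
    CATASTROPHIC = "catastrophic"


@dataclass(frozen=True)
class Recommendation:
    action: str
    confidence: float  # model-reported confidence in [0, 1]
    consequence: Consequence


def route(rec: Recommendation, min_confidence: float = 0.9) -> str:
    """Decide whether the AI may act alone or a human must sign off.

    Hypothetical policy: anything with potentially catastrophic consequences,
    or any advice below the confidence threshold, goes to a human.
    """
    if rec.consequence is Consequence.CATASTROPHIC:
        return "human_review"
    if rec.confidence < min_confidence:
        return "human_review"
    return "auto"


print(route(Recommendation("send_reminder_letter", 0.97, Consequence.SMALL)))         # auto
print(route(Recommendation("raise_capital_buffer", 0.97, Consequence.CATASTROPHIC)))  # human_review
print(route(Recommendation("flag_transaction", 0.60, Consequence.MANAGEABLE)))        # human_review
```

Such a gate only protects against the risks above if reviewers understand the AI's reasoning and the reported confidence is itself reliable. If reviewers routinely approve whatever the AI proposes, the gate exists on paper while the AI becomes the shadow decision-maker.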

While an authority might not wish to reach that point, its use of AI might end up there regardless. As we come to trust AI analysis and decisions, and appreciate how cheaply and well AI performs increasingly complex and essential tasks, it may end up in charge of key functions. Its very success creates trust, and that trust is earned on relatively simple, safe, repetitive tasks before being extended to more critical ones.

As trust builds up, the critical risk is that we become so dependent on AI that the authorities cannot exercise control without it. Turning AI off may be impossible or very unsafe, especially since AI could optimise to become irreplaceable. Eventually, we risk becoming dependent on a system for critical analysis and decisions we don't entirely, or even partially, understand.

Six criteria for AI use in financial policy

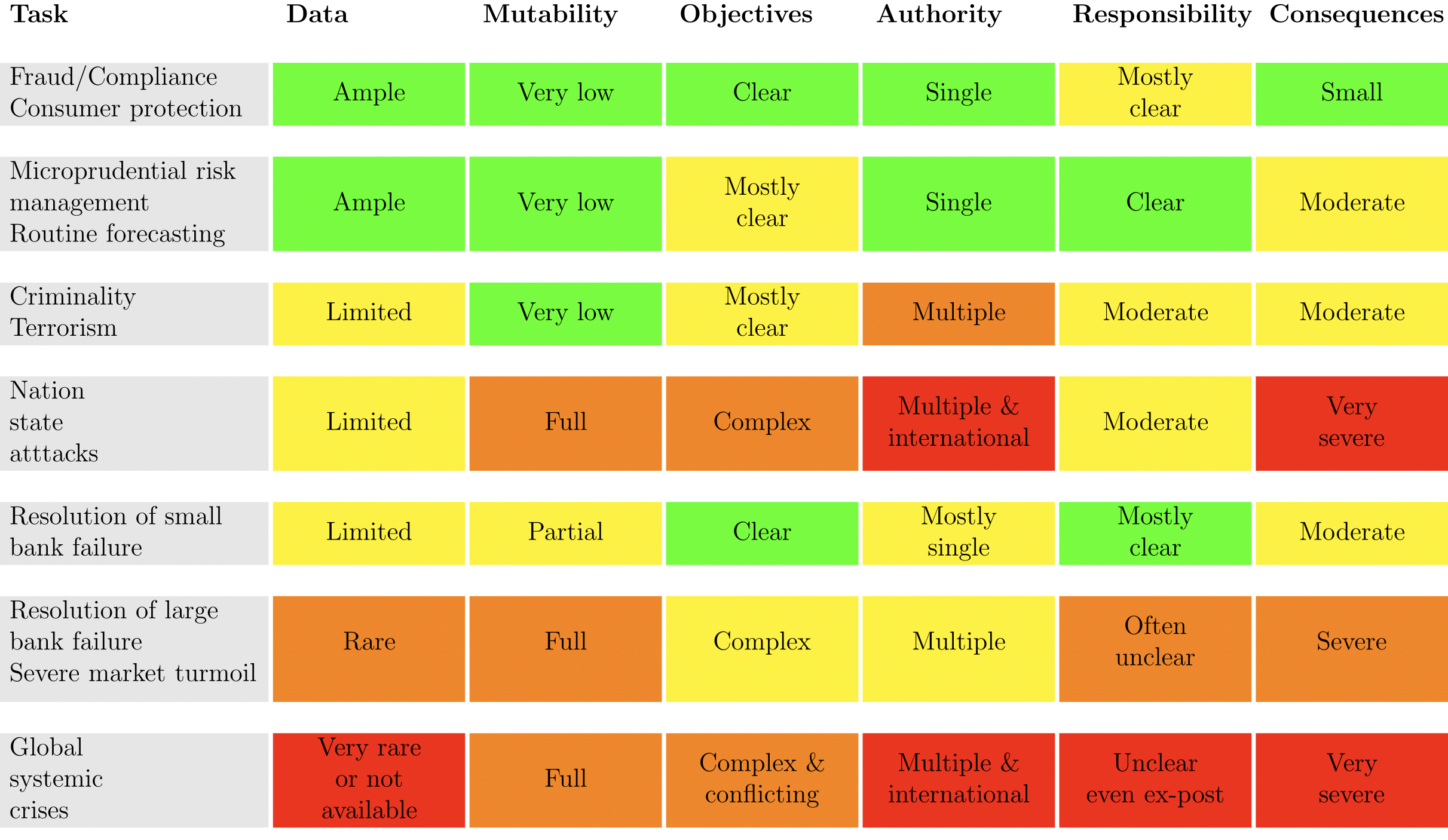

These issues lead us to six criteria for evaluating AI use in financial policy.

- Data. Does an AI engine have enough data to learn from, or do factors that might be absent from its training dataset materially affect its advice and decisions?

- Mutability. Is there a fixed set of immutable rules the AI must obey, or does the regulator update the rules in response to events?

- Objectives. Can AI be given clear objectives and its actions monitored in light of those objectives, or are they unclear?

- Authority. Would a human functionary have the authority to make decisions, does it require committee approval, or is a fully distributed decision-making process brought to bear on a problem?

- Responsibility. Does private AI make it more difficult for the authorities to monitor misbehaviour and assign responsibility in cases of abuse? In particular, can responsibility for damages be clearly assigned to humans?

- Consequences. Are the consequences of mistakes small, large but manageable, or catastrophic?
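The six criteria can be recorded as a simple checklist per task. The Python sketch below flags the criteria on which a task looks unfavourable for AI; the field names, the cut-off chosen for each criterion, and the two example tasks are hypothetical illustrations, not a reproduction of Table 1.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TaskAssessment:
    name: str
    enough_data: bool           # Data
    immutable_rules: bool       # Mutability
    clear_objectives: bool      # Objectives
    authority: str              # Authority: "individual", "committee" or "distributed"
    clear_responsibility: bool  # Responsibility
    consequences: str           # Consequences: "small", "manageable" or "catastrophic"


def concerns(task: TaskAssessment) -> List[str]:
    """List the criteria on which a task looks unfavourable for AI.

    Where the cut-offs lie (e.g. whether committee authority counts as a
    concern) is a hypothetical choice made for illustration.
    """
    flags = []
    if not task.enough_data:
        flags.append("data")
    if not task.immutable_rules:
        flags.append("mutability")
    if not task.clear_objectives:
        flags.append("objectives")
    if task.authority != "individual":
        flags.append("authority")
    if not task.clear_responsibility:
        flags.append("responsibility")
    if task.consequences != "small":
        flags.append("consequences")
    return flags


# Illustrative assessments only.
routine = TaskAssessment("routine compliance check", True, True, True, "individual", True, "small")
crisis = TaskAssessment("crisis intervention", False, False, False, "distributed", False, "catastrophic")
print(concerns(routine))  # []
print(concerns(crisis))   # ['data', 'mutability', 'objectives', 'authority', 'responsibility', 'consequences']
```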

We can then apply these criteria to particular policy actions, as shown in the following table.

Table 1 Particular regulatory tasks and AI consequences

Conclusion

AI will be of considerable help to the financial authorities, but it also brings a significant risk that the authorities will lose control to AI.

The financial authorities will have to change how they operate if they wish to remain effective overseers of the financial system. Many authorities will find that challenging. AI will require new ways of regulating, with different methodologies, human capital, and technology. The very high cost of AI and the oligopolistic nature of AI vendors present particular challenges. If the authorities are reluctant and slow to engage with AI, they risk irrelevance.

However, if the authorities embrace AI, it should be of considerable benefit to their mission. The design and execution of microprudential regulations benefit because the large volume of data, relatively immutable rules, and clarity of objectives all play to AI's strengths.

Macroprudential policy is more challenging. AI will help scan the system for vulnerabilities, evaluate the best responses to stress, and find optimal crisis interventions. However, it also carries the threat of AI hallucination and, hence, inappropriate policy responses. It will be essential to measure the accuracy of AI advice, and it will help if the authorities overcome their frequent reluctance to adopt consistent quantitative frameworks for measuring and reporting the statistical accuracy of their data-based inputs and outputs.
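A consistent quantitative framework for the accuracy of AI advice could start with a calibration check: if an AI reports 90% intervals, roughly 90% of realised outcomes should fall inside them. The Python sketch below computes that empirical coverage; the intervals and outcomes are hypothetical.

```python
from typing import Sequence, Tuple


def empirical_coverage(
    intervals: Sequence[Tuple[float, float]],
    outcomes: Sequence[float],
) -> float:
    """Share of realised outcomes that fell inside the AI's reported intervals."""
    if len(intervals) != len(outcomes) or not outcomes:
        raise ValueError("need one realised outcome per reported interval")
    hits = sum(low <= y <= high for (low, high), y in zip(intervals, outcomes))
    return hits / len(outcomes)


# Hypothetical 90% intervals reported by an AI forecaster, and what happened.
reported = [(1.0, 3.0), (0.0, 2.0), (2.0, 5.0), (1.0, 4.0)]
realised = [2.5, 2.4, 4.0, 0.5]
print(empirical_coverage(reported, realised))  # 0.5, far below the nominal 0.9
```

Coverage well below the nominal level, as here, indicates overconfident advice; tracking it over time is one simple way to report statistical accuracy consistently.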

The authorities need to be aware of AI's benefits and threats and incorporate that awareness into the operational execution of the services they provide to society.

Authors’ note: Any opinions and conclusions expressed here are those of the authors and do not necessarily represent the views of the Bank of Canada.

References

Acemoglu, D (2021), "Dangers of unregulated artificial intelligence", VoxEU.org, 23 November.

Borgonovi, F, F Calvino, C Criscuolo, J Nania, J Nitschke, L O’Kane, L Samek and H Seitz (2023), "Tracking the skills behind the AI boom: Evidence from 14 OECD countries", VoxEU.org, 20 October.

Danielsson, J and A Uthemann (2024a), "How AI can undermine financial stability", VoxEU.org, 22 January.

Danielsson, J and A Uthemann (2024b), "On the use of artificial intelligence in financial regulations and the impact on financial stability".

Russell, S and P Norvig (2021), Artificial Intelligence: A Modern Approach, Pearson.